Hadoop is an open-source software framework for storing data and running applications on clusters of commodity hardware. It provides massive storage for any kind of data, enormous processing power, and the ability to handle a very large number of concurrent tasks or jobs. Apache Hadoop is used mainly for data analysis.

Apache Spark is an open-source distributed general-purpose cluster-computing framework. Spark provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.

The question is: which programming language is best suited to drive Hadoop and Spark?

The programming model for developing Hadoop-based applications is MapReduce. In other words, MapReduce is the processing layer of Hadoop.

The MapReduce programming model is designed for processing large volumes of data in parallel by dividing the work into a set of independent tasks. Hadoop MapReduce is a software framework for writing applications that process vast amounts of structured and unstructured data stored in the Hadoop Distributed File System (HDFS). The biggest advantage of MapReduce is that it makes data processing across multiple computing nodes easy. Under the MapReduce model, the data processing primitives are called mappers and reducers.
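The mapper and reducer roles described above can be sketched in plain Python. This is only an illustration of the model on a single machine, not Hadoop code: the function names `mapper`, `shuffle`, `reducer` and `run_job` are made up for this example, and the shuffle step stands in for the grouping that the framework performs between the two phases.

```python
from collections import defaultdict


def mapper(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle step: group all emitted values by key, as the framework would."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reducer(key, values):
    """Reduce step: combine all values for one key into a single result."""
    return key, sum(values)


def run_job(lines):
    """Run map, shuffle and reduce in sequence on an in-memory list of lines."""
    mapped = (pair for line in lines for pair in mapper(line))
    grouped = shuffle(mapped)
    return dict(reducer(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = ["Hadoop stores data", "Spark processes data", "Hadoop and Spark"]
    print(run_job(sample))
```

Because each call to `mapper` looks at one line only and each call to `reducer` looks at one key only, the calls are independent of each other, which is what lets a real cluster spread them over many nodes.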

Spark is written in Scala and Hadoop is written in Java.

The key difference between Hadoop MapReduce and Spark lies in the approach to processing: Spark can do it in-memory, while Hadoop MapReduce has to read from and write to a disk. As a result, the speed of processing differs significantly – Spark may be up to 100 times faster.

In-memory processing is faster than the disk-based approach of Hadoop MapReduce because no time is spent moving data in and out of the disk. The "100 times" figure is an upper bound for workloads that fit in memory, not a guaranteed speed-up for every job.

A Spark cluster tends to cost more in hardware than a Hadoop MapReduce cluster because in-memory processing needs a lot of RAM.

Hadoop typically runs on Linux, so you need a working knowledge of Linux.
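The two dataflow styles can be pictured with a toy Python pipeline. This is not Hadoop or Spark code and makes no timing claim; the function names are invented for the illustration. One version writes its intermediate result to a temporary file and reads it back before the next stage, the other hands the intermediate result to the next stage directly in memory.

```python
import json
import os
import tempfile


def stage_one(numbers):
    """First processing stage: square every number."""
    return [n * n for n in numbers]


def stage_two(numbers):
    """Second processing stage: add up the results of stage one."""
    return sum(numbers)


def disk_pipeline(numbers):
    """MapReduce-like flow: persist the intermediate result, then read it back."""
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "intermediate.json")
        with open(path, "w") as handle:
            json.dump(stage_one(numbers), handle)
        with open(path) as handle:
            intermediate = json.load(handle)
    return stage_two(intermediate)


def memory_pipeline(numbers):
    """Spark-like flow: pass the intermediate result to the next stage in RAM."""
    return stage_two(stage_one(numbers))


if __name__ == "__main__":
    data = list(range(1, 6))
    print(disk_pipeline(data), memory_pipeline(data))
```

Both functions return the same answer; the difference is only the extra write and read between stages, which is the cost that grows when a job chains many stages over large data.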

Java is important for Hadoop because:

- Some advanced features are only available via the Java API.

- You sometimes need to go deep into the Hadoop code to figure out what is going wrong.

In both these situations, Java becomes very important.

As a developer, you can enjoy many advanced features of Spark and Hadoop if you start with their native languages (Scala and Java, respectively).

What Does Python Offer for Hadoop and Spark?

- Simple syntax – Python's syntax is simple, which makes it more approachable than the other two languages (Java and Scala).

- Easy to learn – Python syntax reads much like English, so it is easier to learn and master.

- Large community support – Python has a huge, active community, larger than Scala's, that can help you solve your problems.

- Libraries, frameworks and packages – Python has a huge number of scientific packages, libraries and frameworks that help you work in any Hadoop or Spark environment.

- Python compatibility with Hadoop – A package called Pydoop offers access to the HDFS API for Hadoop, and so allows you to write Hadoop MapReduce programs and applications in Python.

- Hadoop is based on Java. So are some big data technologies outside Hadoop, such as the Elasticsearch engine, even though it processes JSON REST requests.

- Spark is built on Scala, although PySpark (the Python API for Spark) has gained a lot of momentum lately.

If you plan to work as a Hadoop data analyst, Python is preferable: it has many libraries for advanced analytics, and you can also use Spark for advanced analytics and machine learning through the PySpark API.
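To give a feel for the PySpark API, here is a minimal word-count sketch. It assumes the `pyspark` package is installed and runs Spark in local mode; the function names `tokenize` and `word_count` and the application name are made up for this example. The calls used (`SparkSession.builder`, `parallelize`, `flatMap`, `map`, `reduceByKey`, `collect`) are part of PySpark's RDD API.

```python
def tokenize(line):
    """Split one line of text into lowercase words."""
    return line.lower().split()


def word_count(lines, app_name="word-count-sketch"):
    """Count words with PySpark; requires the pyspark package to be installed."""
    # Imported here so that this file still loads where pyspark is absent.
    from pyspark.sql import SparkSession

    # "local[*]" runs Spark inside this process; a cluster would use its own master URL.
    spark = SparkSession.builder.master("local[*]").appName(app_name).getOrCreate()
    try:
        counts = (
            spark.sparkContext.parallelize(lines)
            .flatMap(tokenize)                # one record per word
            .map(lambda word: (word, 1))      # key-value pair per word
            .reduceByKey(lambda a, b: a + b)  # sum the counts for each word
            .collect()
        )
        return dict(counts)
    finally:
        spark.stop()


# Example call (needs a working Spark installation):
# word_count(["Hadoop and Spark", "Spark and Python"])
```

The whole job is a handful of chained method calls, which is a large part of why Python users find Spark approachable.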

The key-value pair is the record entity that a MapReduce job receives for execution. In the MapReduce process, data must be converted into key-value pairs before it is passed to the mapper, because the mapper only understands data in key-value form.

Key-value pairs in Hadoop MapReduce are generated as follows:
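As one concrete illustration: with Hadoop's default `TextInputFormat`, each line of a text file becomes one record whose key is the byte offset of the line within the file and whose value is the text of the line. The Python sketch below only mimics that behavior on an in-memory byte string; it is not Hadoop code, and the function name `text_records` is made up for this example.

```python
def text_records(data: bytes):
    """Yield (byte_offset, line_text) pairs, one per line of the input."""
    offset = 0
    for raw_line in data.splitlines(keepends=True):
        # The key is where the line starts; the value is the line without its newline.
        yield offset, raw_line.rstrip(b"\r\n").decode("utf-8")
        offset += len(raw_line)


if __name__ == "__main__":
    sample = b"Hadoop stores data\nSpark processes data\n"
    for key, value in text_records(sample):
        print(key, value)
```

A word-count mapper would typically ignore the offset key and work on the line value only.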


Resources:

1- Quora

2- Wikipedia

3- Data Flair

Active Hydrating Toner, Anti-Aging Replenishing Advanced Face Moisturizer, with Vitamins A, C, E & Natural Botanicals to Promote Skin Balance & Collagen Production, 6.7 Fl Oz

Age Defying 0.3% Retinol Serum, Anti-Aging Dark Spot Remover for Face, Fine Lines & Wrinkle Pore Minimizer, with Vitamin E & Natural Botanicals

Firming Moisturizer, Advanced Hydrating Facial Replenishing Cream, with Hyaluronic Acid, Resveratrol & Natural Botanicals to Restore Skin's Strength, Radiance, and Resilience, 1.75 Oz

Skin Stem Cell Serum

Smartphone 101 - Pick a smartphone for me - android or iOS - Apple iPhone or Samsung Galaxy or Huawei or Xaomi or Google Pixel

Can AI Really Predict Lottery Results? We Asked an Expert.

Djamgatech

Read Photos and PDFs Aloud for me iOS

Read Photos and PDFs Aloud for me android

Read Photos and PDFs Aloud For me Windows 10/11

Read Photos and PDFs Aloud For Amazon

Get 20% off Google Workspace (Google Meet) Business Plan (AMERICAS): M9HNXHX3WC9H7YE (Email us for more)

Get 20% off Google Google Workspace (Google Meet) Standard Plan with the following codes: 96DRHDRA9J7GTN6(Email us for more)

FREE 10000+ Quiz Trivia and and Brain Teasers for All Topics including Cloud Computing, General Knowledge, History, Television, Music, Art, Science, Movies, Films, US History, Soccer Football, World Cup, Data Science, Machine Learning, Geography, etc....

List of Freely available programming books - What is the single most influential book every Programmers should read

- Bjarne Stroustrup - The C++ Programming Language

- Brian W. Kernighan, Rob Pike - The Practice of Programming

- Donald Knuth - The Art of Computer Programming

- Ellen Ullman - Close to the Machine

- Ellis Horowitz - Fundamentals of Computer Algorithms

- Eric Raymond - The Art of Unix Programming

- Gerald M. Weinberg - The Psychology of Computer Programming

- James Gosling - The Java Programming Language

- Joel Spolsky - The Best Software Writing I

- Keith Curtis - After the Software Wars

- Richard M. Stallman - Free Software, Free Society

- Richard P. Gabriel - Patterns of Software

- Richard P. Gabriel - Innovation Happens Elsewhere

- Code Complete (2nd edition) by Steve McConnell

- The Pragmatic Programmer

- Structure and Interpretation of Computer Programs

- The C Programming Language by Kernighan and Ritchie

- Introduction to Algorithms by Cormen, Leiserson, Rivest & Stein

- Design Patterns by the Gang of Four

- Refactoring: Improving the Design of Existing Code

- The Mythical Man Month

- The Art of Computer Programming by Donald Knuth

- Compilers: Principles, Techniques and Tools by Alfred V. Aho, Ravi Sethi and Jeffrey D. Ullman

- Gödel, Escher, Bach by Douglas Hofstadter

- Clean Code: A Handbook of Agile Software Craftsmanship by Robert C. Martin

- Effective C++

- More Effective C++

- CODE by Charles Petzold

- Programming Pearls by Jon Bentley

- Working Effectively with Legacy Code by Michael C. Feathers

- Peopleware by Demarco and Lister

- Coders at Work by Peter Seibel

- Surely You're Joking, Mr. Feynman!

- Effective Java 2nd edition

- Patterns of Enterprise Application Architecture by Martin Fowler

- The Little Schemer

- The Seasoned Schemer

- Why's (Poignant) Guide to Ruby

- The Inmates Are Running The Asylum: Why High Tech Products Drive Us Crazy and How to Restore the Sanity

- The Art of Unix Programming

- Test-Driven Development: By Example by Kent Beck

- Practices of an Agile Developer

- Don't Make Me Think

- Agile Software Development, Principles, Patterns, and Practices by Robert C. Martin

- Domain Driven Designs by Eric Evans

- The Design of Everyday Things by Donald Norman

- Modern C++ Design by Andrei Alexandrescu

- Best Software Writing I by Joel Spolsky

- The Practice of Programming by Kernighan and Pike

- Pragmatic Thinking and Learning: Refactor Your Wetware by Andy Hunt

- Software Estimation: Demystifying the Black Art by Steve McConnel

- The Passionate Programmer (My Job Went To India) by Chad Fowler

- Hackers: Heroes of the Computer Revolution

- Algorithms + Data Structures = Programs

- Writing Solid Code

- JavaScript - The Good Parts

- Getting Real by 37 Signals

- Foundations of Programming by Karl Seguin

- Computer Graphics: Principles and Practice in C (2nd Edition)

- Thinking in Java by Bruce Eckel

- The Elements of Computing Systems

- Refactoring to Patterns by Joshua Kerievsky

- Modern Operating Systems by Andrew S. Tanenbaum

- The Annotated Turing

- Things That Make Us Smart by Donald Norman

- The Timeless Way of Building by Christopher Alexander

- The Deadline: A Novel About Project Management by Tom DeMarco

- The C++ Programming Language (3rd edition) by Stroustrup

- Patterns of Enterprise Application Architecture

- Computer Systems - A Programmer's Perspective

- Agile Principles, Patterns, and Practices in C# by Robert C. Martin

- Growing Object-Oriented Software, Guided by Tests

- Framework Design Guidelines by Brad Abrams

- Object Thinking by Dr. David West

- Advanced Programming in the UNIX Environment by W. Richard Stevens

- Hackers and Painters: Big Ideas from the Computer Age

- The Soul of a New Machine by Tracy Kidder

- CLR via C# by Jeffrey Richter

- The Timeless Way of Building by Christopher Alexander

- Design Patterns in C# by Steve Metsker

- Alice in Wonderland by Lewis Carol

- Zen and the Art of Motorcycle Maintenance by Robert M. Pirsig

- About Face - The Essentials of Interaction Design

- Here Comes Everybody: The Power of Organizing Without Organizations by Clay Shirky

- The Tao of Programming

- Computational Beauty of Nature

- Writing Solid Code by Steve Maguire

- Philip and Alex's Guide to Web Publishing

- Object-Oriented Analysis and Design with Applications by Grady Booch

- Effective Java by Joshua Bloch

- Computability by N. J. Cutland

- Masterminds of Programming

- The Tao Te Ching

- The Productive Programmer

- The Art of Deception by Kevin Mitnick

- The Career Programmer: Guerilla Tactics for an Imperfect World by Christopher Duncan

- Paradigms of Artificial Intelligence Programming: Case studies in Common Lisp

- Masters of Doom

- Pragmatic Unit Testing in C# with NUnit by Andy Hunt and Dave Thomas with Matt Hargett

- How To Solve It by George Polya

- The Alchemist by Paulo Coelho

- Smalltalk-80: The Language and its Implementation

- Writing Secure Code (2nd Edition) by Michael Howard

- Introduction to Functional Programming by Philip Wadler and Richard Bird

- No Bugs! by David Thielen

- Rework by Jason Freid and DHH

- JUnit in Action

#BlackOwned #BlackEntrepreneurs #BlackBuniness #AWSCertified #AWSCloudPractitioner #AWSCertification #AWSCLFC02 #CloudComputing #AWSStudyGuide #AWSTraining #AWSCareer #AWSExamPrep #AWSCommunity #AWSEducation #AWSBasics #AWSCertified #AWSMachineLearning #AWSCertification #AWSSpecialty #MachineLearning #AWSStudyGuide #CloudComputing #DataScience #AWSCertified #AWSSolutionsArchitect #AWSArchitectAssociate #AWSCertification #AWSStudyGuide #CloudComputing #AWSArchitecture #AWSTraining #AWSCareer #AWSExamPrep #AWSCommunity #AWSEducation #AzureFundamentals #AZ900 #MicrosoftAzure #ITCertification #CertificationPrep #StudyMaterials #TechLearning #MicrosoftCertified #AzureCertification #TechBooks

Top 1000 Canada Quiz and trivia: CANADA CITIZENSHIP TEST- HISTORY - GEOGRAPHY - GOVERNMENT- CULTURE - PEOPLE - LANGUAGES - TRAVEL - WILDLIFE - HOCKEY - TOURISM - SCENERIES - ARTS - DATA VISUALIZATION

Top 1000 Africa Quiz and trivia: HISTORY - GEOGRAPHY - WILDLIFE - CULTURE - PEOPLE - LANGUAGES - TRAVEL - TOURISM - SCENERIES - ARTS - DATA VISUALIZATION

Exploring the Pros and Cons of Visiting All Provinces and Territories in Canada.

Exploring the Advantages and Disadvantages of Visiting All 50 States in the USA

Health Health, a science-based community to discuss health news and the coronavirus (COVID-19) pandemic

- US infant mortality increased in 2022 for the first time in decades, CDC report showsby /u/cnn on July 25, 2024 at 6:37 pm

submitted by /u/cnn [link] [comments]

- Study raises hopes that shingles vaccine may delay onset of dementia | Dementia | The Guardianby /u/chilladipa on July 25, 2024 at 3:38 pm

submitted by /u/chilladipa [link] [comments]

- How fit is your city? New rankings by the American College of Sports Medicineby /u/idc2011 on July 25, 2024 at 3:35 pm

submitted by /u/idc2011 [link] [comments]

- Twice-Yearly Lenacapavir or Daily F/TAF for HIV Prevention in Cisgender Women | New England Journal of Medicineby /u/chilladipa on July 25, 2024 at 3:30 pm

submitted by /u/chilladipa [link] [comments]

- Biden Made a Healthy Decisionby /u/theatlantic on July 25, 2024 at 3:15 pm

submitted by /u/theatlantic [link] [comments]

Today I Learned (TIL) You learn something new every day; what did you learn today? Submit interesting and specific facts about something that you just found out here.

- TIL actor John Larroquette was the uncredited narrator of the prologue to the 1974 horror movie Texas Chainsaw Massacre. In lieu of cash, he was paid by the Director Tobe Hooper in Marijuana.by /u/openletter8 on July 25, 2024 at 6:56 pm

submitted by /u/openletter8 [link] [comments]

- TIL that the every Shakopee Mdewakanton Sioux indian receives a payout of around $1 million per year from casino profits.by /u/friendlystranger4u on July 25, 2024 at 6:22 pm

submitted by /u/friendlystranger4u [link] [comments]

- TIL Motorcycles in China are dictated by law to be decommissioned and destroyed in 13 years after registration regardless of the conditionsby /u/Easy_Piece_592 on July 25, 2024 at 5:56 pm

submitted by /u/Easy_Piece_592 [link] [comments]

- TIL a man named Jonathan Riches has filed more than 2,600 lawsuits since 2006. He even sued Guinness World Records to try to stop them from titling him as "the most litigious man in history".by /u/doopityWoop22 on July 25, 2024 at 5:03 pm

submitted by /u/doopityWoop22 [link] [comments]

- TIL that in 2018, an American half-pipe skier qualified for the Olympics despite minimal experience. Olympic requirements stated that an athlete needed to place in the top 30 at multiple events. She simply sought out events with fewer than 30 participants, showed up, and skied down without falling.by /u/ctdca on July 25, 2024 at 4:28 pm

submitted by /u/ctdca [link] [comments]

Reddit Science This community is a place to share and discuss new scientific research. Read about the latest advances in astronomy, biology, medicine, physics, social science, and more. Find and submit new publications and popular science coverage of current research.

- Abstinence-only sex education linked to higher pornography use among women | This finding adds to the ongoing conversation about the effectiveness and impacts of different sexuality education approaches.by /u/chrisdh79 on July 25, 2024 at 6:49 pm

submitted by /u/chrisdh79 [link] [comments]

- AlphaProof and AlphaGeometry 2 AI models achieve silver medal standard in solving International Mathematical Olympiad problemsby /u/Big_Profit9076 on July 25, 2024 at 5:59 pm

submitted by /u/Big_Profit9076 [link] [comments]

- Scientists have described a new species of chordate, Nuucichthys rhynchocephalus, the first soft-bodied vertebrate from the Drumian Marjum Formation of the American Great Basin.by /u/grimisgreedy on July 25, 2024 at 5:55 pm

submitted by /u/grimisgreedy [link] [comments]

- Secularists revealed as a unique political force in America, with an intriguing divergence from liberals. Unlike nonreligiosity, which denotes a lack of religious affiliation or belief, secularism involves an active identification with principles grounded in empirical evidence and rational thought.by /u/mvea on July 25, 2024 at 5:40 pm

submitted by /u/mvea [link] [comments]

- New shingles vaccine could reduce risk of dementia. The study found at least a 17% reduction in dementia diagnoses in the six years after the new recombinant shingles vaccination, equating to 164 or more additional days lived without dementia.by /u/Wagamaga on July 25, 2024 at 4:48 pm

submitted by /u/Wagamaga [link] [comments]

Reddit Sports Sports News and Highlights from the NFL, NBA, NHL, MLB, MLS, and leagues around the world.

- A's place their lone all-star, Mason Miller, on IL with fractured finger after hitting training tableby /u/Oldtimer_2 on July 25, 2024 at 8:15 pm

submitted by /u/Oldtimer_2 [link] [comments]

- Flyers sign All-Star Travis Konecny to an 8-year extension worth $70 millionby /u/Oldtimer_2 on July 25, 2024 at 7:45 pm

submitted by /u/Oldtimer_2 [link] [comments]

- Bills’ Von Miller says he believes domestic assault case to be closed, with no charges filedby /u/Oldtimer_2 on July 25, 2024 at 7:43 pm

submitted by /u/Oldtimer_2 [link] [comments]

- Padres' Dylan Cease throws no-hitter vs. Nationalsby /u/Oldtimer_2 on July 25, 2024 at 7:41 pm

submitted by /u/Oldtimer_2 [link] [comments]

- Appeal denied in Valieva case; U.S. skaters to get gold in Parisby /u/PrincessBananas85 on July 25, 2024 at 6:18 pm

submitted by /u/PrincessBananas85 [link] [comments]

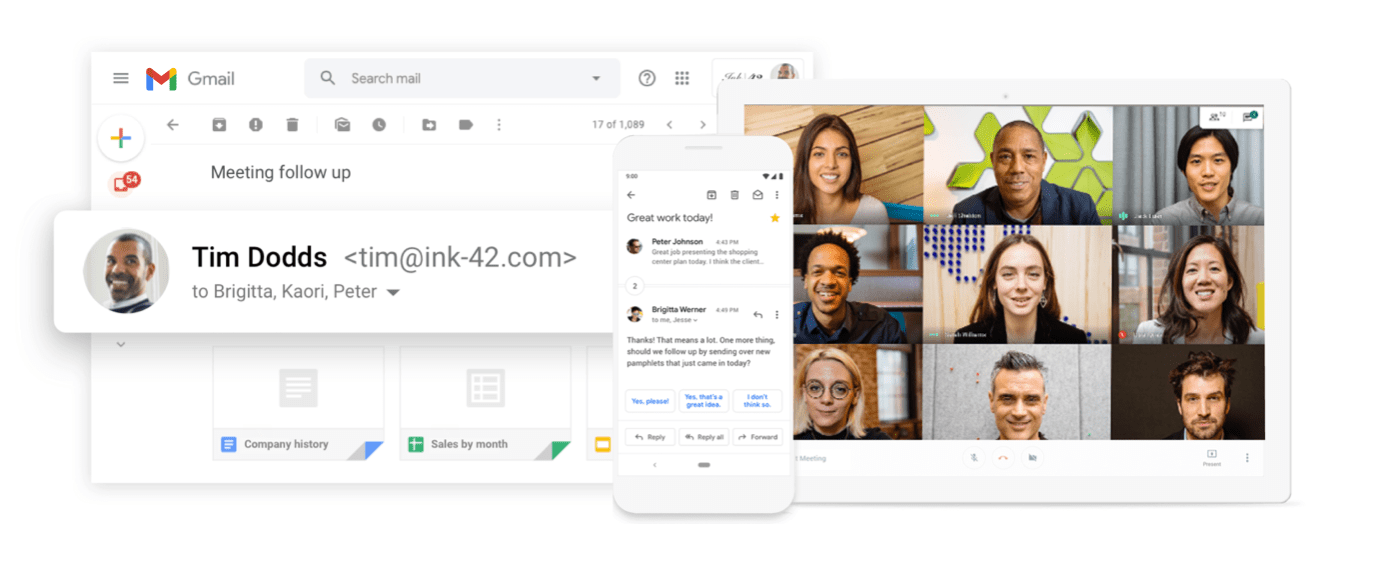

Turn your dream into reality with Google Workspace: It’s free for the first 14 days.

Get 20% off Google Google Workspace (Google Meet) Standard Plan with the following codes:

96DRHDRA9J7GTN6

96DRHDRA9J7GTN6

63F733CLLY7R7MM

63F7D7CPD9XXUVT

63FLKQHWV3AEEE6

63JGLWWK36CP7WM

63KKR9EULQRR7VE

63KNY4N7VHCUA9R

63LDXXFYU6VXDG9

63MGNRCKXURAYWC

63NGNDVVXJP4N99

63P4G3ELRPADKQU

With Google Workspace, Get custom email @yourcompany, Work from anywhere; Easily scale up or down

Google gives you the tools you need to run your business like a pro. Set up custom email, share files securely online, video chat from any device, and more.

Google Workspace provides a platform, a common ground, for all our internal teams and operations to collaboratively support our primary business goal, which is to deliver quality information to our readers quickly.

Get 20% off Google Workspace (Google Meet) Business Plan (AMERICAS): M9HNXHX3WC9H7YE

C37HCAQRVR7JTFK

C3AE76E7WATCTL9

C3C3RGUF9VW6LXE

C3D9LD4L736CALC

C3EQXV674DQ6PXP

C3G9M3JEHXM3XC7

C3GGR3H4TRHUD7L

C3LVUVC3LHKUEQK

C3PVGM4CHHPMWLE

C3QHQ763LWGTW4C

Even if you’re small, you want people to see you as a professional business. If you’re still growing, you need the building blocks to get you where you want to be. I’ve learned so much about business through Google Workspace—I can’t imagine working without it. (Email us for more codes)