What are the Top 100 AWS Solutions Architect Associate Certification Exam Questions and Answers Dump SAA-C03?

AWS Certified Solutions Architects are responsible for designing, deploying, and managing AWS cloud applications. The AWS Cloud Solutions Architect Associate exam validates an examinee’s ability to effectively demonstrate knowledge of how to design and deploy secure and robust applications on AWS technologies. The AWS Solutions Architect Associate training provides an overview of key AWS services, security, architecture, pricing, and support.

An Insightful Overview of SAA-C03 Exam Topics Encountered and Reflecting on My SAA-C03 Exam Journey: From Setback to Success

The AWS Certified Solutions Architect – Associate (SAA-C03) examination is the associate-level step below the AWS Certified Solutions Architect – Professional certification, although AWS does not formally require it before you attempt the Professional exam. Successful completion of this examination can lead to a salary raise or promotion for those in cloud roles. Below are the top 100 AWS Solutions Architect Associate exam prep facts, summaries, questions, and answers.

With average increases in salary of over 25% for certified individuals, you’re going to be in a much better position to secure your dream job or promotion if you earn your AWS Certified Solutions Architect Associate certification. You’ll also develop strong hands-on skills by doing the guided hands-on lab exercises in our course which will set you up for successfully performing in a solutions architect role.

The AWS Solutions Architect Associate is ideal for those performing in Solutions Architect roles and for anyone working at a technical level with AWS technologies. Earning the AWS Certified Solutions Architect Associate will build your credibility and confidence as it demonstrates that you have the cloud skills companies need to innovate for the future.

AWS Certified Solutions Architect – Associate average salary

The AWS Certified Solutions Architect – Associate average salary is $149,446/year

In this blog, we will help you prepare for the AWS Solutions Architect Associate certification exam, give you some facts and summaries, and provide the top AWS Solutions Architect Associate questions and answers.

How long to study for the AWS Solutions Architect exam?

We recommend that you allocate at least 60 minutes of study time per day and you will then be able to complete the certification within 5 weeks (including taking the actual exam). Study times can vary based on your experience with AWS and how much time you have each day, with some students passing their exams much faster and others taking a little longer. Get our eBook here.

How hard is the AWS Certified Solutions Architect Associate exam?

The AWS Solutions Architect Associate exam is an associate-level exam that requires a solid understanding of the AWS platform and a broad range of AWS services. The AWS Certified Solutions Architect Associate exam questions are scenario-based questions and can be challenging. Despite this, the AWS Solutions Architect Associate is often earned by beginners to cloud computing.

A new version of the popular AWS Certified Solutions Architect Associate exam, SAA-C03, was released in August 2022.

AWS Certified Solutions Architect – Associate (SAA-C03) Exam Guide

The AWS Certified Solutions Architect – Associate (SAA-C03) exam is intended for individuals who perform in a solutions architect role.

The exam validates a candidate’s ability to use AWS technologies to design solutions based on the AWS Well-Architected Framework.

What is the format of the AWS Certified Solutions Architect Associate exam?

The SAA-C03 exam is 65 questions long, in multiple-choice and multiple-response formats. You can take the exam in a testing center or as an online proctored exam from your home or office. You have 130 minutes to complete the exam, and the passing mark is 720 points out of 1,000 (72%). If English is not your first language, you can request an accommodation when booking your exam that will qualify you for an additional 30 minutes.

The exam also validates a candidate’s ability to complete the following tasks:

• Design solutions that incorporate AWS services to meet current business requirements and future projected needs

• Design architectures that are secure, resilient, high-performing, and cost-optimized

• Review existing solutions and determine improvements

Unscored content

The exam includes 15 unscored questions that do not affect your score.

AWS collects information about candidate performance on these unscored questions to evaluate these questions for future use as scored questions. These unscored questions are not identified on the exam.

Target candidate description

The target candidate should have at least 1 year of hands-on experience designing cloud solutions that use AWS services.

Your results for the exam are reported as a scaled score of 100–1,000. The minimum passing score is 720.

Your score shows how you performed on the exam as a whole and whether or not you passed. Scaled scoring models help equate scores across multiple exam forms that might have slightly different difficulty levels.

What is the passing score for the AWS Solutions Architect exam?

All AWS certification exam results are reported as a score from 100 to 1000. Your score shows how you performed on the examination as a whole and whether or not you passed. The passing score for the AWS Certified Solutions Architect Associate is 720 (72%).

Can I take the AWS Exam from Home?

Yes, you can now take all AWS Certification exams with online proctoring using Pearson VUE or PSI. Here’s a detailed guide on how to book your AWS exam.

Are there any prerequisites for taking the AWS Certified Solutions Architect exam?

There are no prerequisites for taking AWS exams. You do not need any programming knowledge or experience working with AWS. Everything you need to know is included in our courses. We do recommend that you have a basic understanding of fundamental computing concepts such as compute, storage, networking, and databases.

How much does the AWS Solution Architect Exam cost?

The AWS Solutions Architect Associate exam cost is $150 US.

Once you successfully pass your exam, you will be issued a 50% discount voucher that you can use towards your next AWS Exam.

For more detailed information, check out this blog article on AWS Certification Costs.

The Role of an AWS Certified Solutions Architect Associate

AWS Certified Solutions Architects are IT professionals who design cloud solutions with AWS services to meet given technical requirements. An AWS Solutions Architect Associate is expected to design and implement distributed systems on AWS that are high-performing, scalable, secure and cost optimized.

Content outline:

Domain 1: Design Secure Architectures 30%

Domain 2: Design Resilient Architectures 26%

Domain 3: Design High-Performing Architectures 24%

Domain 4: Design Cost-Optimized Architectures 20%
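To get a feel for what these weights mean in practice, the short sketch below converts them into approximate question counts. It assumes the percentages apply to the 50 scored questions (the 65 total questions minus the 15 unscored ones); the actual mix on any given exam form may differ.

```python
# Domain weights from the content outline above (percent of scored content).
DOMAIN_WEIGHTS = {
    "Design Secure Architectures": 30,
    "Design Resilient Architectures": 26,
    "Design High-Performing Architectures": 24,
    "Design Cost-Optimized Architectures": 20,
}

TOTAL_QUESTIONS = 65
UNSCORED_QUESTIONS = 15
SCORED_QUESTIONS = TOTAL_QUESTIONS - UNSCORED_QUESTIONS  # Assumption: weights apply to these 50.


def approximate_question_counts(weights, scored_questions):
    """Return the approximate number of scored questions per domain."""
    return {
        domain: round(scored_questions * percent / 100)
        for domain, percent in weights.items()
    }


counts = approximate_question_counts(DOMAIN_WEIGHTS, SCORED_QUESTIONS)
for domain, count in counts.items():
    print(f"{domain}: about {count} scored questions")
```

Under that assumption, security alone accounts for roughly as many questions as cost optimization and half of high performance combined, which is a reasonable guide for splitting study time.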

Domain 1: Design Secure Architectures

This exam domain is focused on securing your architectures on AWS and comprises 30% of the exam. Task statements include:

Task Statement 1: Design secure access to AWS resources.

Knowledge of:

• Access controls and management across multiple accounts

• AWS federated access and identity services (for example, AWS Identity and Access Management [IAM], AWS Single Sign-On [AWS SSO])

• AWS global infrastructure (for example, Availability Zones, AWS Regions)

• AWS security best practices (for example, the principle of least privilege)

• The AWS shared responsibility model

Skills in:

• Applying AWS security best practices to IAM users and root users (for example, multi-factor authentication [MFA])

• Designing a flexible authorization model that includes IAM users, groups, roles, and policies

• Designing a role-based access control strategy (for example, AWS Security Token Service [AWS STS], role switching, cross-account access)

• Designing a security strategy for multiple AWS accounts (for example, AWS Control Tower, service control policies [SCPs])

• Determining the appropriate use of resource policies for AWS services

• Determining when to federate a directory service with IAM roles
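The principle of least privilege listed above is easier to remember with a concrete policy in front of you. The sketch below builds an IAM policy document as a Python dictionary that grants read-only access to a single S3 bucket. The bucket name and statement ID are hypothetical, and this is only one possible shape for such a policy.

```python
import json

# Hypothetical bucket name, used only for illustration.
BUCKET_NAME = "example-reports-bucket"

# Grant only the two read actions needed, and only on one bucket.
least_privilege_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadOnlyAccessToOneBucket",  # Hypothetical statement ID.
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                f"arn:aws:s3:::{BUCKET_NAME}",    # The bucket itself (for ListBucket).
                f"arn:aws:s3:::{BUCKET_NAME}/*",  # The objects inside it (for GetObject).
            ],
        }
    ],
}

print(json.dumps(least_privilege_policy, indent=2))
```

Compare this with a policy that allows `s3:*` on `*`: both would let the application read its reports, but only the narrow one limits the damage if the credentials leak.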

Task Statement 2: Design secure workloads and applications.

Knowledge of:

• Application configuration and credentials security

• AWS service endpoints

• Control ports, protocols, and network traffic on AWS

• Secure application access

• Security services with appropriate use cases (for example, Amazon Cognito, Amazon GuardDuty, Amazon Macie)

• Threat vectors external to AWS (for example, DDoS, SQL injection)

Skills in:

• Designing VPC architectures with security components (for example, security groups, route tables, network ACLs, NAT gateways)

• Determining network segmentation strategies (for example, using public subnets and private subnets)

• Integrating AWS services to secure applications (for example, AWS Shield, AWS WAF, AWS SSO, AWS Secrets Manager)

• Securing external network connections to and from the AWS Cloud (for example, VPN, AWS Direct Connect)
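Network segmentation with public and private subnets starts with dividing the VPC address range. The sketch below uses Python's standard `ipaddress` module to carve a hypothetical 10.0.0.0/16 VPC into /24 subnets. The CIDR block, the subnet names, and the two-public/two-private layout are assumptions made for illustration.

```python
import ipaddress

# Hypothetical VPC CIDR block, chosen only for illustration.
vpc_cidr = ipaddress.ip_network("10.0.0.0/16")

# Carve the VPC range into /24 subnets and take the first four.
subnets = list(vpc_cidr.subnets(new_prefix=24))[:4]

# Assumed layout: one public and one private subnet in each of two Availability Zones.
plan = {
    "public-a": subnets[0],
    "public-b": subnets[1],
    "private-a": subnets[2],
    "private-b": subnets[3],
}

for name, network in plan.items():
    # AWS reserves five addresses in every subnet, so fewer are usable in practice.
    print(f"{name}: {network} ({network.num_addresses} addresses in the range)")
```

Whether a subnet is actually public or private is decided by its route table (a route to an internet gateway or not), not by its address range; the names here are only labels.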

Task Statement 3: Determine appropriate data security controls.

Knowledge of:

• Data access and governance

• Data recovery

• Data retention and classification

• Encryption and appropriate key management

Skills in:

• Aligning AWS technologies to meet compliance requirements

• Encrypting data at rest (for example, AWS Key Management Service [AWS KMS])

• Encrypting data in transit (for example, AWS Certificate Manager [ACM] using TLS)

• Implementing access policies for encryption keys

• Implementing data backups and replications

• Implementing policies for data access, lifecycle, and protection

• Rotating encryption keys and renewing certificates

Domain 2: Design Resilient Architectures

This exam domain is focused on designing resilient architectures on AWS and comprises 26% of the exam. Task statements include:

Task Statement 1: Design scalable and loosely coupled architectures.

Knowledge of:

• API creation and management (for example, Amazon API Gateway, REST API)

• AWS managed services with appropriate use cases (for example, AWS Transfer Family, Amazon Simple Queue Service [Amazon SQS], Secrets Manager)

• Caching strategies

• Design principles for microservices (for example, stateless workloads compared with stateful workloads)

• Event-driven architectures

• Horizontal scaling and vertical scaling

• How to appropriately use edge accelerators (for example, content delivery network [CDN])

• How to migrate applications into containers

• Load balancing concepts (for example, Application Load Balancer)

• Multi-tier architectures

• Queuing and messaging concepts (for example, publish/subscribe)

• Serverless technologies and patterns (for example, AWS Fargate, AWS Lambda)

• Storage types with associated characteristics (for example, object, file, block)

• The orchestration of containers (for example, Amazon Elastic Container Service [Amazon ECS], Amazon Elastic Kubernetes Service [Amazon EKS])

• When to use read replicas

• Workflow orchestration (for example, AWS Step Functions)

Skills in:

• Designing event-driven, microservice, and/or multi-tier architectures based on requirements

• Determining scaling strategies for components used in an architecture design

• Determining the AWS services required to achieve loose coupling based on requirements

• Determining when to use containers

• Determining when to use serverless technologies and patterns

• Recommending appropriate compute, storage, networking, and database technologies based on requirements

• Using purpose-built AWS services for workloads
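Loose coupling through a queue is one of the most frequently tested ideas in this task statement. The sketch below shows the pattern with Python's standard library only: an in-process `queue.Queue` stands in for a managed queue such as Amazon SQS, and the function names are hypothetical. It illustrates the idea and is not how you would call SQS itself.

```python
import queue
import threading

# An in-process queue stands in for a managed queue such as Amazon SQS.
order_queue = queue.Queue()
processed = []


def producer(order_ids):
    """Front-end tier: enqueue work and return without waiting for processing."""
    for order_id in order_ids:
        order_queue.put(order_id)


def consumer():
    """Back-end tier: pull work from the queue at its own pace."""
    while True:
        order_id = order_queue.get()
        if order_id is None:  # Sentinel value tells the worker to stop.
            order_queue.task_done()
            break
        processed.append(f"processed order {order_id}")
        order_queue.task_done()


worker = threading.Thread(target=consumer)
worker.start()
producer([1, 2, 3])
order_queue.put(None)  # Signal shutdown after the real work.
order_queue.join()     # Wait until every queued item has been handled.
worker.join()
print(processed)
```

Because the producer only talks to the queue, the consumer can be slow, restarted, or scaled out without the producer noticing, which is the property exam scenarios usually describe as "decoupled".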

Task Statement 2: Design highly available and/or fault-tolerant architectures.

Knowledge of:

• AWS global infrastructure (for example, Availability Zones, AWS Regions, Amazon Route 53)

• AWS managed services with appropriate use cases (for example, Amazon Comprehend, Amazon Polly)

• Basic networking concepts (for example, route tables)

• Disaster recovery (DR) strategies (for example, backup and restore, pilot light, warm standby, active-active failover, recovery point objective [RPO], recovery time objective [RTO])

• Distributed design patterns

• Failover strategies

• Immutable infrastructure

• Load balancing concepts (for example, Application Load Balancer)

• Proxy concepts (for example, Amazon RDS Proxy)

• Service quotas and throttling (for example, how to configure the service quotas for a workload in a standby environment)

• Storage options and characteristics (for example, durability, replication)

• Workload visibility (for example, AWS X-Ray)

Skills in:

• Determining automation strategies to ensure infrastructure integrity

• Determining the AWS services required to provide a highly available and/or fault-tolerant architecture across AWS Regions or Availability Zones

• Identifying metrics based on business requirements to deliver a highly available solution

• Implementing designs to mitigate single points of failure

• Implementing strategies to ensure the durability and availability of data (for example, backups)

• Selecting an appropriate DR strategy to meet business requirements

• Using AWS services that improve the reliability of legacy applications and applications not built for the cloud (for example, when application changes are not possible)

• Using purpose-built AWS services for workloads

Domain 3: Design High-Performing Architectures

This exam domain is focused on designing high-performing architectures on AWS and comprises 24% of the exam. Task statements include:

Task Statement 1: Determine high-performing and/or scalable storage solutions.

Knowledge of:

• Hybrid storage solutions to meet business requirements

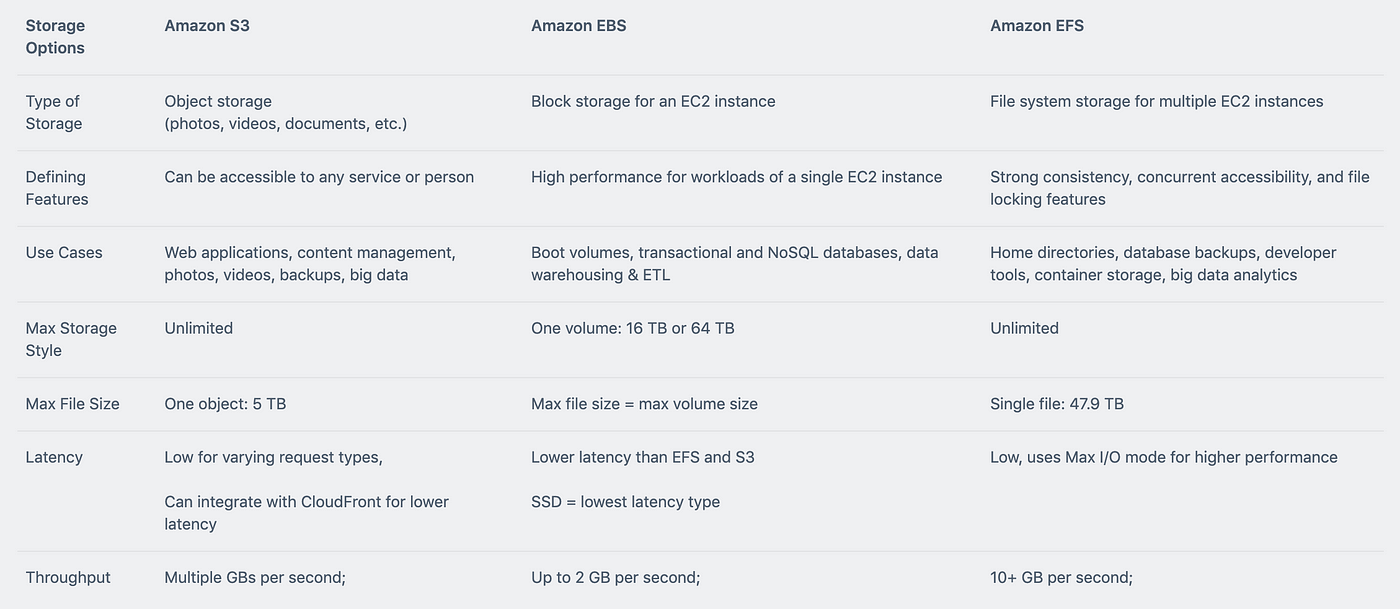

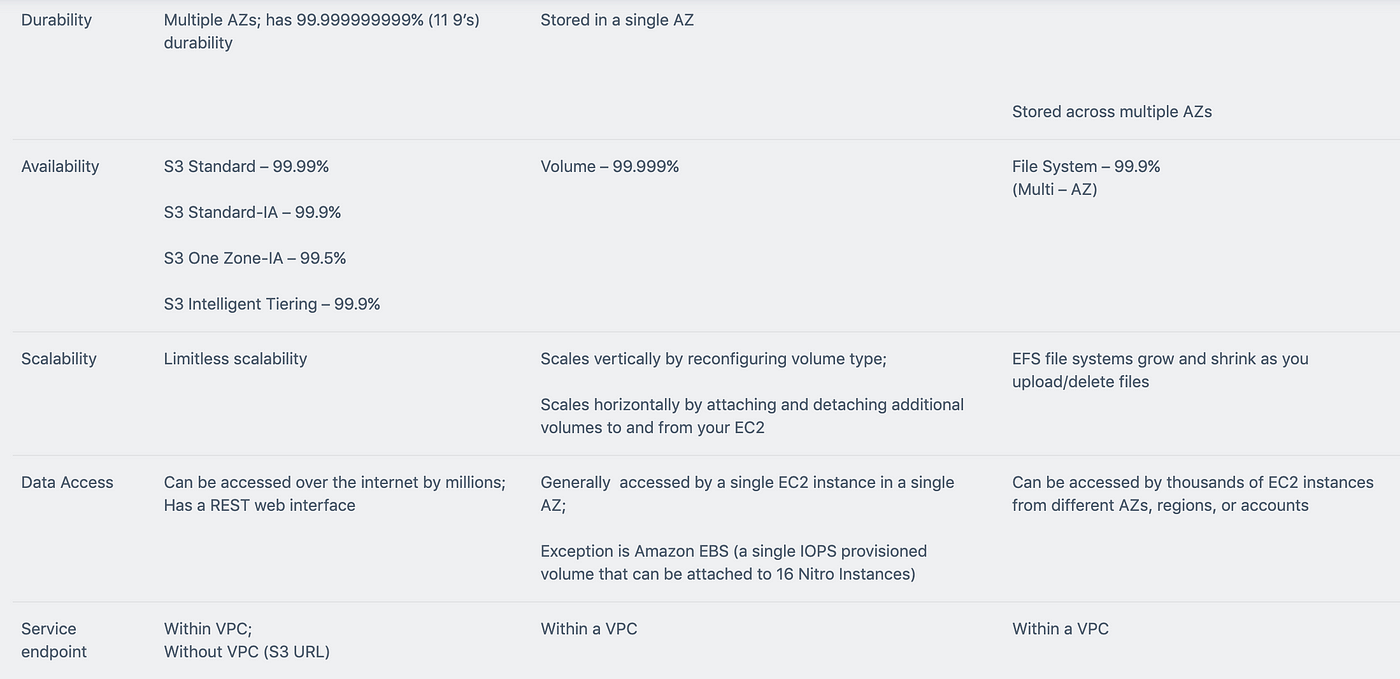

• Storage services with appropriate use cases (for example, Amazon S3, Amazon Elastic File System [Amazon EFS], Amazon Elastic Block Store [Amazon EBS])

• Storage types with associated characteristics (for example, object, file, block)

Skills in:

• Determining storage services and configurations that meet performance demands

• Determining storage services that can scale to accommodate future needs

Task Statement 2: Design high-performing and elastic compute solutions.

Knowledge of:

• AWS compute services with appropriate use cases (for example, AWS Batch, Amazon EMR, Fargate)

• Distributed computing concepts supported by AWS global infrastructure and edge services

• Queuing and messaging concepts (for example, publish/subscribe)

• Scalability capabilities with appropriate use cases (for example, Amazon EC2 Auto Scaling, AWS Auto Scaling)

• Serverless technologies and patterns (for example, Lambda, Fargate)

• The orchestration of containers (for example, Amazon ECS, Amazon EKS)

Skills in:

• Decoupling workloads so that components can scale independently

• Identifying metrics and conditions to perform scaling actions

• Selecting the appropriate compute options and features (for example, EC2 instance types) to meet business requirements

• Selecting the appropriate resource type and size (for example, the amount of Lambda memory) to meet business requirements
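Identifying metrics and conditions for scaling is easier to reason about with numbers. The sketch below uses a simplified proportional rule: scale the instance count by the ratio of the observed metric to its target. This is an illustrative assumption, not the algorithm that Amazon EC2 Auto Scaling or any other AWS service actually uses, and the function name and limits are hypothetical.

```python
import math


def desired_capacity(current_instances, metric_value, target_value,
                     min_instances=1, max_instances=10):
    """Proportional estimate of how many instances keep the metric near its target."""
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    estimate = math.ceil(current_instances * metric_value / target_value)
    # Clamp the estimate to the configured minimum and maximum group size.
    return max(min_instances, min(max_instances, estimate))


# Average CPU at 80% against a 50% target with 4 instances: scale out.
print(desired_capacity(4, metric_value=80.0, target_value=50.0))

# Average CPU at 20% against the same target: scale in.
print(desired_capacity(4, metric_value=20.0, target_value=50.0))
```

The minimum and maximum bounds matter as much as the target: they cap cost on the way up and protect availability on the way down.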

Task Statement 3: Determine high-performing database solutions.

Knowledge of:

• AWS global infrastructure (for example, Availability Zones, AWS Regions)

• Caching strategies and services (for example, Amazon ElastiCache)

• Data access patterns (for example, read-intensive compared with write-intensive)

• Database capacity planning (for example, capacity units, instance types, Provisioned IOPS)

• Database connections and proxies

• Database engines with appropriate use cases (for example, heterogeneous migrations, homogeneous migrations)

• Database replication (for example, read replicas)

• Database types and services (for example, serverless, relational compared with non-relational, in-memory)

Skills in:

• Configuring read replicas to meet business requirements

• Designing database architectures

• Determining an appropriate database engine (for example, MySQL compared with PostgreSQL)

• Determining an appropriate database type (for example, Amazon Aurora, Amazon DynamoDB)

• Integrating caching to meet business requirements
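Integrating caching usually means some form of the cache-aside pattern for read-heavy workloads. The sketch below shows it with plain dictionaries standing in for the cache (for example, Amazon ElastiCache) and the database. All names and data are hypothetical, and real caches also need expiry and invalidation, which are left out here.

```python
# Plain dictionaries stand in for the database and the cache.
database = {"user:1": {"name": "Alice"}, "user:2": {"name": "Bob"}}
cache = {}
stats = {"database_reads": 0}


def get_item(key):
    """Cache-aside read: check the cache first, fall back to the database on a miss."""
    if key in cache:
        return cache[key]
    stats["database_reads"] += 1
    value = database.get(key)
    if value is not None:
        cache[key] = value  # Populate the cache for later reads.
    return value


get_item("user:1")  # Cache miss: reads the database and fills the cache.
get_item("user:1")  # Cache hit: the database is not touched.
print(stats["database_reads"])
```

The second read never reaches the database, which is exactly the effect you want when a scenario describes a read-intensive workload overloading its database.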

Task Statement 4: Determine high-performing and/or scalable network architectures.

Knowledge of:

• Edge networking services with appropriate use cases (for example, Amazon CloudFront, AWS Global Accelerator)

• How to design network architecture (for example, subnet tiers, routing, IP addressing)

• Load balancing concepts (for example, Application Load Balancer)

• Network connection options (for example, AWS VPN, Direct Connect, AWS PrivateLink)

Skills in:

• Creating a network topology for various architectures (for example, global, hybrid, multi-tier)

• Determining network configurations that can scale to accommodate future needs

• Determining the appropriate placement of resources to meet business requirements

• Selecting the appropriate load balancing strategy

Task Statement 5: Determine high-performing data ingestion and transformation solutions.

Knowledge of:

• Data analytics and visualization services with appropriate use cases (for example, Amazon Athena, AWS Lake Formation, Amazon QuickSight)

• Data ingestion patterns (for example, frequency)

• Data transfer services with appropriate use cases (for example, AWS DataSync, AWS Storage Gateway)

• Data transformation services with appropriate use cases (for example, AWS Glue)

• Secure access to ingestion access points

• Sizes and speeds needed to meet business requirements

• Streaming data services with appropriate use cases (for example, Amazon Kinesis)

Skills in:

• Building and securing data lakes

• Designing data streaming architectures

• Designing data transfer solutions

• Implementing visualization strategies

• Selecting appropriate compute options for data processing (for example, Amazon EMR)

• Selecting appropriate configurations for ingestion

• Transforming data between formats (for example, .csv to .parquet)

Domain 4: Design Cost-Optimized Architectures

This exam domain is focused on optimizing solutions for cost-effectiveness on AWS and comprises 20% of the exam. Task statements include:

Task Statement 1: Design cost-optimized storage solutions.

Knowledge of:

• Access options (for example, an S3 bucket with Requester Pays object storage)

• AWS cost management service features (for example, cost allocation tags, multi-account billing)

• AWS cost management tools with appropriate use cases (for example, AWS Cost Explorer, AWS Budgets, AWS Cost and Usage Report)

• AWS storage services with appropriate use cases (for example, Amazon FSx, Amazon EFS, Amazon S3, Amazon EBS)

• Backup strategies

• Block storage options (for example, hard disk drive [HDD] volume types, solid state drive [SSD] volume types)

• Data lifecycles

• Hybrid storage options (for example, DataSync, Transfer Family, Storage Gateway)

• Storage access patterns

• Storage tiering (for example, cold tiering for object storage)

• Storage types with associated characteristics (for example, object, file, block)

Skills in:

• Designing appropriate storage strategies (for example, batch uploads to Amazon S3 compared with individual uploads)

• Determining the correct storage size for a workload

• Determining the lowest cost method of transferring data for a workload to AWS storage

• Determining when storage auto scaling is required

• Managing S3 object lifecycles

• Selecting the appropriate backup and/or archival solution

• Selecting the appropriate service for data migration to storage services

• Selecting the appropriate storage tier

• Selecting the correct data lifecycle for storage

• Selecting the most cost-effective storage service for a workload

Task Statement 2: Design cost-optimized compute solutions.

Knowledge of:

• AWS cost management service features (for example, cost allocation tags, multi-account billing)

• AWS cost management tools with appropriate use cases (for example, Cost Explorer, AWS Budgets, AWS Cost and Usage Report)

• AWS global infrastructure (for example, Availability Zones, AWS Regions)

• AWS purchasing options (for example, Spot Instances, Reserved Instances, Savings Plans)

• Distributed compute strategies (for example, edge processing)

• Hybrid compute options (for example, AWS Outposts, AWS Snowball Edge)

• Instance types, families, and sizes (for example, memory optimized, compute optimized, virtualization)

• Optimization of compute utilization (for example, containers, serverless computing, microservices)

• Scaling strategies (for example, auto scaling, hibernation)

Skills in:

• Determining an appropriate load balancing strategy (for example, Application Load Balancer [Layer 7] compared with Network Load Balancer [Layer 4] compared with Gateway Load Balancer)

• Determining appropriate scaling methods and strategies for elastic workloads (for example, horizontal compared with vertical, EC2 hibernation)

• Determining cost-effective AWS compute services with appropriate use cases (for example, Lambda, Amazon EC2, Fargate)

• Determining the required availability for different classes of workloads (for example, production workloads, non-production workloads)

• Selecting the appropriate instance family for a workload

• Selecting the appropriate instance size for a workload
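Choosing between purchasing options often comes down to simple arithmetic on utilization. The sketch below compares On-Demand with a Reserved Instance for one instance over a month. The hourly prices are hypothetical placeholders, not real AWS pricing, and the model ignores upfront payment options and Savings Plans.

```python
HOURS_PER_MONTH = 730

# Hypothetical hourly prices in USD, not real AWS pricing.
ON_DEMAND_HOURLY = 0.10
RESERVED_EFFECTIVE_HOURLY = 0.06  # Billed for every hour of the term, used or not.


def monthly_cost_on_demand(hours_used):
    """On-Demand is billed only for the hours the instance runs."""
    return hours_used * ON_DEMAND_HOURLY


def monthly_cost_reserved():
    """A reservation is paid for the whole month regardless of usage."""
    return HOURS_PER_MONTH * RESERVED_EFFECTIVE_HOURLY


def cheaper_option(hours_used):
    """Return which option costs less for the given monthly usage."""
    on_demand = monthly_cost_on_demand(hours_used)
    reserved = monthly_cost_reserved()
    return "on-demand" if on_demand < reserved else "reserved"


for hours in (200, 500, 730):
    print(hours, "hours per month:", cheaper_option(hours))
```

With these placeholder prices, a workload that runs only part of the month is cheaper On-Demand, while a steady, always-on workload favors the reservation. Exam scenarios typically signal this with phrases such as "steady-state" or "runs a few hours a day".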

Task Statement 3: Design cost-optimized database solutions.

Knowledge of:

• AWS cost management service features (for example, cost allocation tags, multi-account billing)

• AWS cost management tools with appropriate use cases (for example, Cost Explorer, AWS Budgets, AWS Cost and Usage Report)

• Caching strategies

• Data retention policies

• Database capacity planning (for example, capacity units)

• Database connections and proxies

• Database engines with appropriate use cases (for example, heterogeneous migrations, homogeneous migrations)

• Database replication (for example, read replicas)

• Database types and services (for example, relational compared with non-relational, Aurora, DynamoDB)

Skills in:

• Designing appropriate backup and retention policies (for example, snapshot frequency)

• Determining an appropriate database engine (for example, MySQL compared with PostgreSQL)

• Determining cost-effective AWS database services with appropriate use cases (for example, DynamoDB compared with Amazon RDS, serverless)

• Determining cost-effective AWS database types (for example, time series format, columnar format)

• Migrating database schemas and data to different locations and/or different database engines

Task Statement 4: Design cost-optimized network architectures.

Knowledge of:

• AWS cost management service features (for example, cost allocation tags, multi-account billing)

• AWS cost management tools with appropriate use cases (for example, Cost Explorer, AWS Budgets, AWS Cost and Usage Report)

• Load balancing concepts (for example, Application Load Balancer)

• NAT gateways (for example, NAT instance costs compared with NAT gateway costs)

• Network connectivity (for example, private lines, dedicated lines, VPNs)

• Network routing, topology, and peering (for example, AWS Transit Gateway, VPC peering)

• Network services with appropriate use cases (for example, DNS)

Skills in:

• Configuring appropriate NAT gateway types for a network (for example, a single shared NAT gateway compared with NAT gateways for each Availability Zone)

• Configuring appropriate network connections (for example, Direct Connect compared with VPN compared with internet)

• Configuring appropriate network routes to minimize network transfer costs (for example, Region to Region, Availability Zone to Availability Zone, private to public, Global Accelerator, VPC endpoints)

• Determining strategic needs for content delivery networks (CDNs) and edge caching

• Reviewing existing workloads for network optimizations

• Selecting an appropriate throttling strategy

• Selecting the appropriate bandwidth allocation for a network device (for example, a single VPN compared with multiple VPNs, Direct Connect speed)

Which key tools, technologies, and concepts might be covered on the exam?

The following is a non-exhaustive list of the tools and technologies that could appear on the exam.

This list is subject to change and is provided to help you understand the general scope of services, features, or technologies on the exam.

The general tools and technologies in this list appear in no particular order.

AWS services are grouped according to their primary functions. While some of these technologies will likely be covered more than others on the exam, the order and placement of them in this list is no indication of relative weight or importance:

• Compute

• Cost management

• Database

• Disaster recovery

• High performance

• Management and governance

• Microservices and component decoupling

• Migration and data transfer

• Networking, connectivity, and content delivery

• Resiliency

• Security

• Serverless and event-driven design principles

• Storage

AWS Services and Features

There are lots of new services and feature updates in scope for the new AWS Certified Solutions Architect Associate certification! Here’s a list of some of the new services that will be in scope for the new version of the exam:

Analytics:

• Amazon Athena

• AWS Data Exchange

• AWS Data Pipeline

• Amazon EMR

• AWS Glue

• Amazon Kinesis

• AWS Lake Formation

• Amazon Managed Streaming for Apache Kafka (Amazon MSK)

• Amazon OpenSearch Service (Amazon Elasticsearch Service)

• Amazon QuickSight

• Amazon Redshift

Application Integration:

• Amazon AppFlow

• AWS AppSync

• Amazon EventBridge (Amazon CloudWatch Events)

• Amazon MQ

• Amazon Simple Notification Service (Amazon SNS)

• Amazon Simple Queue Service (Amazon SQS)

• AWS Step Functions

AWS Cost Management:

• AWS Budgets

• AWS Cost and Usage Report

• AWS Cost Explorer

• Savings Plans

Compute:

• AWS Batch

• Amazon EC2

• Amazon EC2 Auto Scaling

• AWS Elastic Beanstalk

• AWS Outposts

• AWS Serverless Application Repository

• VMware Cloud on AWS

• AWS Wavelength

Containers:

• Amazon Elastic Container Registry (Amazon ECR)

• Amazon Elastic Container Service (Amazon ECS)

• Amazon ECS Anywhere

• Amazon Elastic Kubernetes Service (Amazon EKS)

• Amazon EKS Anywhere

• Amazon EKS Distro

Database:

• Amazon Aurora

• Amazon Aurora Serverless

• Amazon DocumentDB (with MongoDB compatibility)

• Amazon DynamoDB

• Amazon ElastiCache

• Amazon Keyspaces (for Apache Cassandra)

• Amazon Neptune

• Amazon Quantum Ledger Database (Amazon QLDB)

• Amazon RDS

• Amazon Redshift

• Amazon Timestream

Developer Tools:

• AWS X-Ray

Front-End Web and Mobile:

• AWS Amplify

• Amazon API Gateway

• AWS Device Farm

• Amazon Pinpoint

Machine Learning:

• Amazon Comprehend

• Amazon Forecast

• Amazon Fraud Detector

• Amazon Kendra

• Amazon Lex

• Amazon Polly

• Amazon Rekognition

• Amazon SageMaker

• Amazon Textract

• Amazon Transcribe

• Amazon Translate

Management and Governance:

• AWS Auto Scaling

• AWS CloudFormation

• AWS CloudTrail

• Amazon CloudWatch

• AWS Command Line Interface (AWS CLI)

• AWS Compute Optimizer

• AWS Config

• AWS Control Tower

• AWS License Manager

• Amazon Managed Grafana

• Amazon Managed Service for Prometheus

• AWS Management Console

• AWS Organizations

• AWS Personal Health Dashboard

• AWS Proton

• AWS Service Catalog

• AWS Systems Manager

• AWS Trusted Advisor

• AWS Well-Architected Tool

Media Services:

• Amazon Elastic Transcoder

• Amazon Kinesis Video Streams

Migration and Transfer:

• AWS Application Discovery Service

• AWS Application Migration Service (CloudEndure Migration)

• AWS Database Migration Service (AWS DMS)

• AWS DataSync

• AWS Migration Hub

• AWS Server Migration Service (AWS SMS)

• AWS Snow Family

• AWS Transfer Family

Networking and Content Delivery:

• Amazon CloudFront

• AWS Direct Connect

• Elastic Load Balancing (ELB)

• AWS Global Accelerator

• AWS PrivateLink

• Amazon Route 53

• AWS Transit Gateway

• Amazon VPC

• AWS VPN

Security, Identity, and Compliance:

• AWS Artifact

• AWS Audit Manager

• AWS Certificate Manager (ACM)

• AWS CloudHSM

• Amazon Cognito

• Amazon Detective

• AWS Directory Service

• AWS Firewall Manager

• Amazon GuardDuty

• AWS Identity and Access Management (IAM)

• Amazon Inspector

• AWS Key Management Service (AWS KMS)

• Amazon Macie

• AWS Network Firewall

• AWS Resource Access Manager (AWS RAM)

• AWS Secrets Manager

• AWS Security Hub

• AWS Shield

• AWS Single Sign-On

• AWS WAF

Serverless:

• AWS AppSync

• AWS Fargate

• AWS Lambda

Storage:

• AWS Backup

• Amazon Elastic Block Store (Amazon EBS)

• Amazon Elastic File System (Amazon EFS)

• Amazon FSx (for all types)

• Amazon S3

• Amazon S3 Glacier

• AWS Storage Gateway

Out-of-scope AWS services and features

The following is a non-exhaustive list of AWS services and features that are not covered on the exam.

These services and features do not represent every AWS offering that is excluded from the exam content.

Analytics:

• Amazon CloudSearch

Application Integration:

• Amazon Managed Workflows for Apache Airflow (Amazon MWAA)

AR and VR:

• Amazon Sumerian

Blockchain:

• Amazon Managed Blockchain

Compute:

• Amazon Lightsail

Database:

• Amazon RDS on VMware

Developer Tools:

• AWS Cloud9

• AWS Cloud Development Kit (AWS CDK)

• AWS CloudShell

• AWS CodeArtifact

• AWS CodeBuild

• AWS CodeCommit

• AWS CodeDeploy

• Amazon CodeGuru

• AWS CodeStar

• Amazon Corretto

• AWS Fault Injection Simulator (AWS FIS)

• AWS Tools and SDKs

Front-End Web and Mobile:

• Amazon Location Service

Game Tech:

• Amazon GameLift

• Amazon Lumberyard

Internet of Things:

• All services

Which new AWS services will be covered in the SAA-C03?

• AWS Data Exchange

• AWS Data Pipeline

• AWS Lake Formation

• Amazon Managed Streaming for Apache Kafka

• Amazon AppFlow

• AWS Outposts

• VMware Cloud on AWS

• AWS Wavelength

• Amazon Neptune

• Amazon Quantum Ledger Database

• Amazon Timestream

• AWS Amplify

• Amazon Comprehend

• Amazon Forecast

• Amazon Fraud Detector

• Amazon Kendra

• AWS License Manager

• Amazon Managed Grafana

• Amazon Managed Service for Prometheus

• AWS Proton

• Amazon Elastic Transcoder

• Amazon Kinesis Video Streams

• AWS Application Discovery Service

• AWS WAF Serverless

• AWS AppSync

AWS solutions architect associate exam prep facts and summaries questions and answers dump – Solution Architecture Definition 1:

Solution architecture is a practice of defining and describing an architecture of a system delivered in context of a specific solution and as such it may encompass description of an entire system or only its specific parts. Definition of a solution architecture is typically led by a solution architect.

AWS solutions architect associate exam prep facts and summaries questions and answers dump – Solution Architecture Definition 2:

The AWS Certified Solutions Architect – Associate examination is intended for individuals who perform a solutions architect role and have one or more years of hands-on experience designing available, cost-efficient, fault-tolerant, and scalable distributed systems on AWS.

AWS solutions architect associate exam prep facts and summaries questions and answers dump – AWS Solution Architect Associate Exam Facts and Summaries (SAA-C03)

- Take an AWS Training Class

- Study AWS Whitepapers and FAQs: AWS Well-Architected webpage (various whitepapers linked)

- If you are running an application in a production environment and must add a new EBS volume with data from a snapshot, what could you do to avoid degraded performance during the volume’s first use?

Initialize the data by reading each storage block on the volume.

Volumes created from an EBS snapshot must be initialized. Initialization occurs the first time a storage block on the volume is read, and performance can be reduced by up to 50% until it completes. You can avoid this impact in production environments by pre-warming the volume, that is, by reading all of its blocks before use.

- If you are running a legacy application that has hard-coded static IP addresses and it is running on an EC2 instance, what is the best failover solution that allows you to keep the same IP address on a new instance?

Elastic IP addresses (EIPs) are designed to be attached, detached, and moved from one EC2 instance to another. They are a great solution for keeping a static IP address and moving it to a new instance if the current instance fails. This will reduce or eliminate any downtime users may experience.

- Which feature of Intel processors helps to encrypt data without significant impact on performance?

AES-NI

- You can mount to EFS from which two of the following?

- On-prem servers running Linux

- EC2 instances running Linux

EFS is not compatible with Windows operating systems.
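The EBS initialization answer above (reading every storage block before first use) can be sketched in a few lines. This is only an illustration, assuming the volume is visible as a readable path; the function name `read_all_blocks` and the 1 MiB block size are assumptions, and in practice a tool such as `dd` or `fio` is usually used against the block device instead.

```python
def read_all_blocks(path: str, block_size: int = 1024 * 1024) -> int:
    """Read `path` sequentially from start to end and return the bytes read.

    Touching every block is what forces a snapshot-backed volume to pull
    its data down, so later reads do not pay the first-access penalty.
    """
    total = 0
    # buffering=0 gives raw reads so each call goes to the underlying file.
    with open(path, "rb", buffering=0) as device:
        while True:
            chunk = device.read(block_size)
            if not chunk:  # empty bytes object means end of device/file
                break
            total += len(chunk)
    return total
```

On a real instance the path would be the attached device (for example a hypothetical `/dev/xvdf`), and the call would need root privileges.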

When a file(s) is encrypted and the stored data is not in transit it’s known as encryption at rest. What is an example of encryption at rest?

When would vertical scaling be necessary? When an application is built entirely into one source code, otherwise known as a monolithic application.

Fault-Tolerance allows for continuous operation throughout a failure, which can lead to a low Recovery Time Objective. RPO vs RTO

- High-Availability means automating tasks so that an instance will quickly recover, which can lead to a low Recovery Time Objective. RPO vs. RTO

- Frequent backups reduce the time between the last backup and recovery point, otherwise known as the Recovery Point Objective. RPO vs. RTO

- Which represents the difference between Fault-Tolerance and High-Availability? High-Availability means the system will quickly recover from a failure event, and Fault-Tolerance means the system will maintain operations during a failure.

- From a security perspective, what is a principal? An anonymous user falls under the definition of a principal. A principal can be an anonymous user acting on a system.

An authenticated user falls under the definition of a principal. A principal can be an authenticated user acting on a system.

- What are two types of session data saving for an Application Session State? Stateless and Stateful

23. It is the customer’s responsibility to patch the operating system on an EC2 instance.

24. In designing an environment, what four main points should a Solutions Architect keep in mind? Cost-efficient, secure, application session state, undifferentiated heavy lifting: These four main points should be the framework when designing an environment.

25. In the context of disaster recovery, what does RPO stand for? RPO is the abbreviation for Recovery Point Objective.

26. What are the benefits of horizontal scaling?

Vertical scaling can be costly while horizontal scaling is cheaper.

Horizontal scaling suffers from none of the size limitations of vertical scaling.

Having horizontal scaling means you can easily route traffic to another instance of a server.
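The RPO and RTO facts above can be made concrete with a small worked example. This is a sketch with made-up timestamps; the function name `meets_objectives` is hypothetical. It treats the data lost as the time between the last backup and the failure (compared against the RPO) and the downtime as the time between the failure and the restored service (compared against the RTO).

```python
from datetime import datetime, timedelta
from typing import Tuple


def meets_objectives(
    last_backup: datetime,
    failure: datetime,
    restored: datetime,
    rpo: timedelta,
    rto: timedelta,
) -> Tuple[bool, bool]:
    """Return (rpo_met, rto_met) for a single failure event."""
    data_loss_window = failure - last_backup  # work done since the last backup is lost
    downtime = restored - failure             # how long the service was unavailable
    return data_loss_window <= rpo, downtime <= rto


# Illustrative numbers only: backup at 12:00, failure at 12:45, service back at 14:00.
result = meets_objectives(
    last_backup=datetime(2023, 1, 1, 12, 0),
    failure=datetime(2023, 1, 1, 12, 45),
    restored=datetime(2023, 1, 1, 14, 0),
    rpo=timedelta(hours=1),
    rto=timedelta(hours=1),
)
```

With these numbers the 45-minute data-loss window fits inside a one-hour RPO, while the 75-minute outage exceeds a one-hour RTO. More frequent backups tighten the first figure; faster recovery or fault tolerance tightens the second.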


Reference: AWS Solution Architect Associate Exam Prep

Top 100 AWS solutions architect associate exam prep facts and summaries questions and answers dump – SAA-C03


Top AWS solutions architect associate exam prep facts and summaries questions and answers dump – Quizzes

Q1: A Solutions Architect is designing a critical business application with a relational database that runs on an EC2 instance. It requires a single EBS volume that can support up to 16,000 IOPS.

Which Amazon EBS volume type can meet the performance requirements of this application?

- A. EBS Provisioned IOPS SSD

- B. EBS Throughput Optimized HDD

- C. EBS General Purpose SSD

- D. EBS Cold HDD

Q2: An application running on EC2 instances processes sensitive information stored on Amazon S3. The information is accessed over the Internet. The security team is concerned that the Internet connectivity to Amazon S3 is a security risk.

Which solution will resolve the security concern?

- A. Access the data through an Internet Gateway.

- B. Access the data through a VPN connection.

- C. Access the data through a NAT Gateway.

- D. Access the data through a VPC endpoint for Amazon S3.

Q3: An organization is building an Amazon Redshift cluster in their shared services VPC. The cluster will host sensitive data.

How can the organization control which networks can access the cluster?

- A. Run the cluster in a different VPC and connect through VPC peering.

- B. Create a database user inside the Amazon Redshift cluster only for users on the network.

- C. Define a cluster security group for the cluster that allows access from the allowed networks.

- D. Only allow access to networks that connect with the shared services network via VPN.

Q4: A web application allows customers to upload orders to an S3 bucket. The resulting Amazon S3 events trigger a Lambda function that inserts a message into an SQS queue. A single EC2 instance reads messages from the queue, processes them, and stores them in a DynamoDB table partitioned by unique order ID. Next month traffic is expected to increase by a factor of 10, and a Solutions Architect is reviewing the architecture for possible scaling problems.

Which component is MOST likely to need re-architecting to be able to scale to accommodate the new traffic?

- A. Lambda function

- B. SQS queue

- C. EC2 instance

- D. DynamoDB table

Q5: An application requires a highly available relational database with an initial storage capacity of 8 TB. The database will grow by 8 GB every day. To support expected traffic, at least eight read replicas will be required to handle database reads.

Which option will meet these requirements?

- A. DynamoDB

- B. Amazon S3

- C. Amazon Aurora

- D. Amazon Redshift

Q6: How can you improve the performance of EFS?

- A. Use an instance-store backed EC2 instance.

- B. Provision more throughput than is required.

- C. Divide your file system into multiple smaller file systems.

- D. Provision higher IOPs for your EFS.

Q7: If you are designing an application that requires fast (10 – 25 Gbps), low-latency connections between EC2 instances, what EC2 feature should you use?

- A. Snapshots

- B. Instance store volumes

- C. Placement groups

- D. IOPS provisioned instances.

Q8: A Solution Architect is designing an online shopping application running in a VPC on EC2 instances behind an ELB Application Load Balancer. The instances run in an Auto Scaling group across multiple Availability Zones. The application tier must read and write data to a customer managed database cluster. There should be no access to the database from the Internet, but the cluster must be able to obtain software patches from the Internet.

Which VPC design meets these requirements?

- A. Public subnets for both the application tier and the database cluster

- B. Public subnets for the application tier, and private subnets for the database cluster

- C. Public subnets for the application tier and NAT Gateway, and private subnets for the database cluster

- D. Public subnets for the application tier, and private subnets for the database cluster and NAT Gateway

Q9: What command should you run on a running instance if you want to view its user data (that is used at launch)?

- A. curl http://254.169.254.169/latest/user-data

- B. curl http://localhost/latest/meta-data/bootstrap

- C. curl http://localhost/latest/user-data

- D. curl http://169.254.169.254/latest/user-data
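For context on Q9: EC2 serves instance metadata and user data from the link-local address 169.254.169.254, which is only reachable from inside the instance. The sketch below shows the same lookup in Python using the token-based (IMDSv2) flow; the helper names are assumptions made for illustration, and `fetch_user_data` only works when run on an EC2 instance.

```python
import urllib.request

IMDS = "http://169.254.169.254"  # instance metadata service, link-local address


def token_request(ttl_seconds: int = 21600) -> urllib.request.Request:
    """Build the IMDSv2 request that asks for a session token."""
    return urllib.request.Request(
        f"{IMDS}/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def user_data_request(token: str) -> urllib.request.Request:
    """Build the request that reads the user data supplied at launch."""
    return urllib.request.Request(
        f"{IMDS}/latest/user-data",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch_user_data(timeout: float = 2.0) -> str:
    """Fetch user data; must run on the instance itself."""
    with urllib.request.urlopen(token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(user_data_request(token), timeout=timeout) as resp:
        return resp.read().decode()
```

The request builders are kept separate from the network call so the URLs and methods can be inspected without being on an instance.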

Q10: A company is developing a highly available web application using stateless web servers. Which services are suitable for storing session state data? (Select TWO.)

- A. CloudWatch

- B. DynamoDB

- C. Elastic Load Balancing

- D. ElastiCache

- E. Storage Gateway

Q11: From a security perspective, what is a principal?

- A. An identity

- B. An anonymous user

- C. An authenticated user

- D. A resource

Q12: What are the characteristics of a tiered application?

- A. All three application layers are on the same instance

- B. The presentation tier is on a separate instance from the logic layer

- C. None of the tiers can be cloned

- D. The logic layer is on a separate instance from the data layer

- E. Additional machines can be added to help the application by implementing horizontal scaling

- F. Incapable of horizontal scaling

Q13: When using horizontal scaling, how can a server’s capacity closely match its rising demand?

A. By frequently purchasing additional instances and smaller resources

B. By purchasing more resources very far in advance

C. By purchasing more resources after demand has risen

D. It is not possible to predict demand
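As a rough illustration of how horizontal scaling tracks demand, the sketch below computes how many identical instances a given load needs and how much capacity sits idle. The numbers and function names are hypothetical; real Auto Scaling policies use metrics and cooldowns rather than a direct division.

```python
import math


def instances_needed(demand_rps: float, capacity_per_instance_rps: float) -> int:
    """Smallest number of identical instances that covers the demand."""
    if capacity_per_instance_rps <= 0:
        raise ValueError("capacity_per_instance_rps must be positive")
    return max(1, math.ceil(demand_rps / capacity_per_instance_rps))


def unused_capacity(demand_rps: float, capacity_per_instance_rps: float) -> float:
    """Provisioned capacity that is not serving any demand."""
    count = instances_needed(demand_rps, capacity_per_instance_rps)
    return count * capacity_per_instance_rps - demand_rps


# Demand rising over time, served by instances that each handle 100 requests/s.
fleet_sizes = [instances_needed(d, 100) for d in (120, 250, 410)]
```

Because capacity is added one small instance at a time, the idle headroom never exceeds the capacity of a single instance, which is the sense in which small, frequent additions keep capacity close to demand.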

Q14: What is the concept behind AWS’ Well-Architected Framework?

A. It’s a set of best practice areas, principles, and concepts that can help you implement effective AWS solutions.

B. It’s a set of best practice areas, principles, and concepts that can help you implement effective solutions tailored to your specific business.

C. It’s a set of best practice areas, principles, and concepts that can help you implement effective solutions from another web host.

D. It’s a set of best practice areas, principles, and concepts that can help you implement effective E-Commerce solutions.

Question 127: Which options are examples of steps you take to protect your serverless application from attacks? (Select FOUR.)

A. Update your operating system with the latest patches.

B. Configure geoblocking on Amazon CloudFront in front of regional API endpoints.

C. Disable origin access identity on Amazon S3.

D. Disable CORS on your APIs.

E. Use resource policies to limit access to your APIs to users from a specified account.

F. Filter out specific traffic patterns with AWS WAF.

G. Parameterize queries so that your Lambda function expects a single input.

Question 128: Which options reflect best practices for automating your deployment pipeline with serverless applications? (Select TWO.)

A. Select one deployment framework and use it for all of your deployments for consistency.

B. Use different AWS accounts for each environment in your deployment pipeline.

C. Use AWS SAM to configure safe deployments and include pre- and post-traffic tests.

D. Create a specific AWS SAM template to match each environment to keep them distinct.

Question 129: Your application needs to connect to an Amazon RDS instance on the backend. What is the best recommendation to the developer whose function must read from and write to the Amazon RDS instance?

A. Use reserved concurrency to limit the number of concurrent functions that would try to write to the database

B. Use the database proxy feature to provide connection pooling for the functions

C. Initialize the number of connections you want outside of the handler

D. Use the database TTL setting to clean up connections

Question 130: A company runs a cron job on an Amazon EC2 instance on a predefined schedule. The cron job calls a bash script that encrypts a 2 KB file. A security engineer creates an AWS Key Management Service (AWS KMS) CMK with a key policy.

The key policy and the EC2 instance role have the necessary configuration for this job.

Which process should the bash script use to encrypt the file?

A) Use the aws kms encrypt command to encrypt the file by using the existing CMK.

B) Use the aws kms create-grant command to generate a grant for the existing CMK.

C) Use the aws kms encrypt command to generate a data key. Use the plaintext data key to encrypt the file.

D) Use the aws kms generate-data-key command to generate a data key. Use the encrypted data key to encrypt the file.
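One detail that matters for Question 130 is size: the KMS Encrypt operation accepts at most 4,096 bytes of plaintext with a symmetric key, so small files can be encrypted directly while larger ones call for envelope encryption with a data key. The sketch below encodes just that decision; the function name is hypothetical and it does not call AWS.

```python
# Maximum plaintext size the KMS Encrypt operation accepts for a symmetric key.
KMS_DIRECT_ENCRYPT_LIMIT_BYTES = 4096


def choose_kms_command(plaintext_size_bytes: int) -> str:
    """Return the AWS CLI command family suited to a payload of this size."""
    if plaintext_size_bytes < 0:
        raise ValueError("size cannot be negative")
    if plaintext_size_bytes <= KMS_DIRECT_ENCRYPT_LIMIT_BYTES:
        # Small payloads can be sent to KMS and encrypted under the key itself.
        return "aws kms encrypt"
    # Larger payloads: generate a data key, encrypt locally with the plaintext
    # data key, and store the encrypted copy of the data key next to the data.
    return "aws kms generate-data-key"
```

For the 2 KB file in the question, `choose_kms_command(2 * 1024)` falls under the limit.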

Question 131: A Security engineer must develop an AWS Identity and Access Management (IAM) strategy for a company’s organization in AWS Organizations. The company needs to give developers autonomy to develop and test their applications on AWS, but the company also needs to implement security guardrails to help protect itself. The company creates and distributes applications with different levels of data classification and types. The solution must maximize scalability.

Which combination of steps should the security engineer take to meet these requirements? (Choose three.)

A) Create an SCP to restrict access to highly privileged or unauthorized actions to specific IAM principals. Assign the SCP to the appropriate AWS accounts.

B) Create an IAM permissions boundary to allow access to specific actions and IAM principals. Assign the IAM permissions boundary to all IAM principals within the organization.

C) Create a delegated IAM role that has capabilities to create other IAM roles. Use the delegated IAM role to provision IAM principals by following the principle of least privilege.

D) Create OUs based on data classification and type. Add the AWS accounts to the appropriate OU. Provide developers access to the AWS accounts based on business need.

E) Create IAM groups based on data classification and type. Add only the required developers’ IAM role to the IAM groups within each AWS account.

F) Create IAM policies based on data classification and type. Add the minimum required IAM policies to the developers’ IAM role within each AWS account.

Question 132: A company is ready to deploy a public web application. The company will use AWS and will host the application on an Amazon EC2 instance. The company must use SSL/TLS encryption. The company is already using AWS Certificate Manager (ACM) and will export a certificate for use with the deployment.

How can a security engineer deploy the application to meet these requirements?

A) Put the EC2 instance behind an Application Load Balancer (ALB). In the EC2 console, associate the certificate with the ALB by choosing HTTPS and 443.

B) Put the EC2 instance behind a Network Load Balancer. Associate the certificate with the EC2 instance.

C) Put the EC2 instance behind a Network Load Balancer (NLB). In the EC2 console, associate the certificate with the NLB by choosing HTTPS and 443.

D) Put the EC2 instance behind an Application Load Balancer. Associate the certificate with the EC2 instance.

What are the 6 pillars of the AWS Well-Architected Framework?

AWS Well-Architected helps cloud architects build secure, high-performing, resilient, and efficient infrastructure for their applications and workloads. Based on six pillars (operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability), AWS Well-Architected provides a consistent approach for customers and partners to evaluate architectures and implement designs that can scale over time.

1. Operational Excellence

The operational excellence pillar includes the ability to run and monitor systems to deliver business value and to continually improve supporting processes and procedures. You can find prescriptive guidance on implementation in the Operational Excellence Pillar whitepaper.

2. Security

The security pillar includes the ability to protect information, systems, and assets while delivering business value through risk assessments and mitigation strategies. You can find prescriptive guidance on implementation in the Security Pillar whitepaper.

3. Reliability

The reliability pillar includes the ability of a system to recover from infrastructure or service disruptions, dynamically acquire computing resources to meet demand, and mitigate disruptions such as misconfigurations or transient network issues. You can find prescriptive guidance on implementation in the Reliability Pillar whitepaper.

4. Performance Efficiency

The performance efficiency pillar includes the ability to use computing resources efficiently to meet system requirements and to maintain that efficiency as demand changes and technologies evolve. You can find prescriptive guidance on implementation in the Performance Efficiency Pillar whitepaper.

5. Cost Optimization

The cost optimization pillar includes the ability to avoid or eliminate unneeded cost or suboptimal resources. You can find prescriptive guidance on implementation in the Cost Optimization Pillar whitepaper.

6. Sustainability

- The ability to increase efficiency across all components of a workload by maximizing the benefits from the provisioned resources.

- There are six best practice areas for sustainability in the cloud:

- Region Selection – AWS Global Infrastructure

- User Behavior Patterns – Auto Scaling, Elastic Load Balancing

- Software and Architecture Patterns – AWS Design Principles

- Data Patterns – Amazon EBS, Amazon EFS, Amazon FSx, Amazon S3

- Hardware Patterns – Amazon EC2, AWS Elastic Beanstalk

- Development and Deployment Process – AWS CloudFormation

- Key AWS service:

- Amazon EC2 Auto Scaling

Source: 6 pillars of the AWS Well-Architected Framework

The AWS Well-Architected Framework provides architectural best practices across the six pillars for designing and operating reliable, secure, efficient, and cost-effective systems in the cloud. The framework provides a set of questions that allows you to review an existing or proposed architecture. It also provides a set of AWS best practices for each pillar.

Using the Framework in your architecture helps you produce stable and efficient systems, which allows you to focus on functional requirements.

Other AWS Facts and Summaries and Questions/Answers Dump

- AWS Certified Solution Architect Associate Exam Prep App

- AWS S3 facts and summaries and Q&A Dump

- AWS DynamoDB facts and summaries and Questions and Answers Dump

- AWS EC2 facts and summaries and Questions and Answers Dump

- AWS Serverless facts and summaries and Questions and Answers Dump

- AWS Developer and Deployment Theory facts and summaries and Questions and Answers Dump

- AWS IAM facts and summaries and Questions and Answers Dump

- AWS Lambda facts and summaries and Questions and Answers Dump

- AWS SQS facts and summaries and Questions and Answers Dump

- AWS RDS facts and summaries and Questions and Answers Dump

- AWS ECS facts and summaries and Questions and Answers Dump

- AWS CloudWatch facts and summaries and Questions and Answers Dump

- AWS SES facts and summaries and Questions and Answers Dump

- AWS EBS facts and summaries and Questions and Answers Dump

- AWS ELB facts and summaries and Questions and Answers Dump

- AWS Autoscaling facts and summaries and Questions and Answers Dump

- AWS VPC facts and summaries and Questions and Answers Dump

- AWS KMS facts and summaries and Questions and Answers Dump

- AWS Elastic Beanstalk facts and summaries and Questions and Answers Dump

- AWS CodeBuild facts and summaries and Questions and Answers Dump

- AWS CodeDeploy facts and summaries and Questions and Answers Dump

- AWS CodePipeline facts and summaries and Questions and Answers Dump

What does undifferentiated heavy lifting mean?

The reality, of course, today is that if you come up with a great idea you don’t get to go quickly to a successful product. There’s a lot of undifferentiated heavy lifting that stands between your idea and that success. The kinds of things that I’m talking about when I say undifferentiated heavy lifting are things like these: figuring out which servers to buy, how many of them to buy, what time line to buy them.

Eventually you end up with heterogeneous hardware and you have to match that. You have to think about backup scenarios if you lose your data center or lose connectivity to a data center. Eventually you have to move facilities. There’s negotiations to be done. It’s a very complex set of activities that really is a big driver of ultimate success.

But they are undifferentiated from, it’s not the heart of, your idea. We call this muck. And it gets worse because what really happens is you don’t have to do this one time. You have to drive this loop. After you get your first version of your idea out into the marketplace, you’ve done all that undifferentiated heavy lifting, you find out that you have to cycle back. Change your idea. The winners are the ones that can cycle this loop the fastest.

On every cycle of this loop you have this undifferentiated heavy lifting, or muck, that you have to contend with. I believe that for most companies, and it’s certainly true at Amazon, that 70% of your time, energy, and dollars go into the undifferentiated heavy lifting and only 30% of your energy, time, and dollars gets to go into the core kernel of your idea.

I think what people are excited about is that they see a future where they may be able to invert those two. Where they may be able to spend 70% of their time, energy and dollars on the differentiated part of what they’re doing.

AWS Certified Solutions Architect Associates Questions and Answers around the web.

Testimonial: Passed SAA-C02!

So my exam was yesterday and I got the results in 24 hours. I think that’s how they review all saa exams, not showing the results right away anymore.

I scored 858. Was practicing with Stephan’s udemy lectures and Bonso exam tests. My test results were as follows:

Test 1. 63%, 93%

Test 2. 67%, 87%

Test 3. 81%

Test 4. 72%

Test 5. 75%

Test 6. 81%

Stephan’s test. 80%

I was reading all question explanations (even the ones I got correct)

The actual exam was pretty much similar to these. The topics I got were:

A lot of S3 (make sure you know all of it from head to toes)

VPC peering

DataSync and Database Migration Service in same questions. Make sure you know the difference

One EKS question

2-3 KMS questions

Security group question

A lot of RDS Multi-AZ

SQS + SNS fan out pattern

ECS microservice architecture question

Route 53

NAT gateway

And that’s all I can remember.

I took extra 30 minutes, because English is not my native language and I had plenty of time to think and then review flagged questions.

Good luck with your exams guys!

Testimonial: Passed SAA-C02

Hey guys, just giving my update so all of you guys working towards your certs can stay motivated as these success stories drove me to reach this goal.

Background: 12 years of military IT experience, never worked with the cloud. I’ve done 7 deployments (that is a lot in 12 years), at which point I came home from the last one burnt out with a family that barely knew me. I knew I needed a change, but had no clue where to start or what I wanted to do. I wasn’t really interested in IT but I knew it’d pay the bills. After seeing videos about people in IT working from home(which after 8+ years of being gone from home really appealed to me), I stumbled across a video about a Solutions Architect’s daily routine working from home and got me interested in AWS.

AWS Solutions Architect SAA Certification Preparation time: It took me 68 days straight of hard work to pass this exam with confidence. No rest days, more than 120 pages of hand-written notes and hundreds and hundreds of flash cards.

In the beginning, I hopped on Stephane Maarek’s course for the CCP exam just to see if it was for me. I did the course in about a week and then after doing some research on here, got the CCP Practice exams from tutorialsdojo.com Two weeks after starting the Udemy course, I passed the exam. By that point, I’d already done lots of research on the different career paths and the best way to study, etc.

Cantrill(10/10) – That same day, I hopped onto Cantrill’s course for the SAA and got to work. Somebody had mentioned that by doing his courses you’d be over-prepared for the exam. While I think a combination of material is really important for passing the certification with confidence, I can say without a doubt Cantrill’s courses got me 85-90% of the way there. His forum is also amazing, and has directly contributed to me talking with somebody who works at AWS to land me a job, which makes the money I spent on all of his courses A STEAL. As I continue my journey (up next is SA Pro), I will be using all of his courses.

Neal Davis(8/10) – After completing Cantrill’s course, I found myself needing a resource to reinforce all the material I’d just learned. AWS is an expansive platform and the many intricacies of the different services can be tricky. For this portion, I relied on Neal Davis’s Training Notes series. These training notes are a very condensed version of the information you’ll need to pass the exam, and with the proper context are very useful to find the things you may have missed in your initial learnings. I will be using his other Training Notes for my other exams as well.

TutorialsDojo(10/10) – These tests filled in the gaps and allowed me to spot my weaknesses and shore them up. I actually think my real exam was harder than these, but because I’d spent so much time on the material I got wrong, I was able to pass the exam with a safe score.

As I said, I was surprised at how difficult the exam was. A lot of my questions were related to DBs, and a lot of them gave no context as to whether the data being loaded into them was SQL or NoSQL which made the choice selection a little frustrating. A lot of the questions have 2 VERY SIMILAR answers, and often time the wording of the answers could be easy to misinterpret (such as when you are creating a Read Replica, do you attach it to the primary application DB that is slowing down because of read issues or attach it to the service that is causing the primary DB to slow down). For context, I was scoring 95-100% on the TD exams prior to taking the test and managed a 823 on the exam so I don’t know if I got unlucky with a hard test or if I’m not as prepared as I thought I was (i.e. over-thinking questions).

Anyways, up next is going back over the practical parts of the course as I gear up for the SA Pro exam. I will be taking my time with this one, and re-learning the Linux CLI in preparation for finding a new job.

PS if anybody on here is hiring, I’m looking! I’m the hardest worker I know and my goal is to make your company as streamlined and profitable as possible. 🙂

Testimonial: How did you prepare for AWS Certified Solutions Architect – Associate Level certification?

Best way to prepare for aws solution architect associate certification

Practical knowledge is about 30% of what matters; the rest is Jayendra’s blog and dumps.

Buying Udemy courses alone doesn’t make you pass. I can say for sure that without going through dumps and Jayendra’s blog it is not easy to clear the certification.

Read FAQs of S3, IAM, EC2, VPC, SQS, Autoscaling, Elastic Load Balancer, EBS, RDS, Lambda, API Gateway, ECS.

Read the Security Whitepaper and Shared Responsibility model.

Most importantly, expect basic questions on the topics most recently added to the exam, such as Amazon Kinesis.

– ACloudGuru course with practice test’s

– Created my own cheat sheet in excel

– Practice questions on various website

– Few AWS services FAQ’s

– Some questions were your understanding about which service to pick for the use case.

– many questions on VPC

– a couple of unexpected question on AWS CloudHSM, AWS systems manager, aws athena

– encryption at rest and in transit services

– migration from on-premise to AWS

– backup data in az vs regional

I believe the time was sufficient.

Overall I feel AWS SAA was more challenging in theory than GCP Associate CE.

some resources I bookmarked:

- Comparison of AWS Services

- Solutions Architect – Associate | Qwiklabs

- okeeffed/cheat-sheets

- A curated list of AWS resources to prepare for the AWS Certifications

- AWS Cheat Sheet

Whitepapers contain important information about each service and are published by Amazon on its website. If you are preparing for the AWS certifications, it is very important to read some of the most recommended whitepapers before writing the exam.

The following whitepapers are useful for preparing for the solutions architect exam. You will also find the list of whitepapers in the exam blueprint.

- Overview of Security Processes

- Storage Options in the Cloud

- Defining Fault Tolerant Applications in the AWS Cloud

- Overview of Amazon Web Services

- Compliance Whitepaper

- Architecting for the AWS Cloud

Data Security questions could be the more challenging and it’s worth noting that you need to have a good understanding of security processes described in the whitepaper titled “Overview of Security Processes”.

In the above list, the most important whitepapers are Overview of Security Processes and Storage Options in the Cloud.

Big thanks to /u/acantril for his amazing course – AWS Certified Solutions Architect – Associate (SAA-C02) – the best IT course I’ve ever had – and I’ve done many on various other platforms:

CBTNuggets

LinuxAcademy

ACloudGuru

Udemy

Linkedin

O’Reilly

If you’re on the fence with buying one of his courses, stop thinking and buy it, I guarantee you won’t regret it! Other materials used for study:

Jon Bonso Practice Exams for SAA-C02 @ Tutorialsdojo (amazing practice exams!)

Random YouTube videos (example)

Official AWS Documentation (example)

TechStudySlack (learning community)

Study duration approximately ~3 months with the following regimen:

Daily study from 30 min to 2 hrs

Usually early morning before work

Sometimes on the train when commuting from/to work

Sometimes in the evening

Due to being a father/husband, study wasn’t always possible

All learned topics reviewed weekly

Testimonial: I passed SAA-C02 … But don’t do what I did to pass it

I’ve been following this subreddit for a while and gotten some helpful tips, so I’d like to give back with my two cents. FYI I passed the exam with a score of 788.

The exam materials that I used were the following:

AWS Certified Solutions Architect Associate All-in-One Exam Guide (Banerjee)

Stephen Maarek’s Udemy course, and his 6 exam practices

Adrian Cantrill’s online course (about 60% done)

TutorialDojo’s exams

(My company has udemy business account so I was able to use Stephen’s course/exam)

I scheduled my exam at the end of March, and started with Adrian’s. But I was dumb thinking that I could go through his course within 3 weeks… I stopped around 12% of his course and went to the textbook and finished reading the all-in-one exam guide within a weekend. Then I started going through Stephen’s course. While working through the course, I pushed the exam back to the end of April, because I knew I wouldn’t be ready by the time the exam came along.

Five days before the exam, I finished Stephen’s course, and then did his final exam on the course. I failed miserably (around 50%). So I did one of Stephen’s practice exam and did worse (42%). I thought maybe it might be his exams that are slightly difficult, so I went and bought Jon Bonso’s exam and got 60% on his first one. And then I realized based on all the questions on the exams, I was definitely lacking some fundamentals. I went back to Adrian’s course and things were definitely sticking more – I think it has to do with his explanations + more practical stuff. Unfortunately, I could not finish his course before the exam (because I was cramming), and on the day of the exam, I could only do Bonso’s four of six exams, with barely passing one of them.

Please, don’t do what I did. I was desperate to get this thing over with. I wanted to move on and work on other things for my job search, but if you’re not in this situation, please don’t do this. I can’t for the love of god tell you about OAI and CloudFront and why that’s different from an S3 URL. The only thing that I can remember is all the practical stuff that I did with Adrian’s course. I’ll never forget how to create a VPC, because he makes you go through it manually. I’m not against Stephane’s course – each is different in its own way (see the tips below).

So here’s what I recommend doing before writing the AWS exam:

Don’t schedule your exam beforehand. Go through the materials that you are using, and make sure you get at least 80% on all of Jon Bonso’s exams (I’d recommend maybe 90% or higher).

If you like to learn things practically, I do recommend Adrian’s course. If you like to learn things conceptually, go with Stephane Maarek’s course. I found Stephane’s course more detailed when going through different architectures, but I can’t really say that for sure because I didn’t finish Adrian’s course.

Jon Bonso’s exams were about the same difficulty as the actual exam, but they’re slightly trickier. For example, many of the questions give you two different situations that may seem to contradict each other, and you really have to figure out what is being asked, because the actual question is about one specific thing. However, there were a few questions that were definitely obvious if you knew the service.
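The 80% rule above can be turned into a quick self-check. This is only a minimal sketch: the function name `ready_for_exam` and the sample scores are made up for illustration, and the threshold is simply the 80% recommended above (raise it to 90 if you want the stricter bar).

```python
# Hypothetical helper: the 80% threshold comes from the recommendation above;
# the function name and the score lists are invented for illustration.
PASS_THRESHOLD = 80  # percent


def ready_for_exam(scores, threshold=PASS_THRESHOLD):
    """Return True only if there is at least one score and every
    practice exam score meets the threshold."""
    return bool(scores) and all(score >= threshold for score in scores)


# Scores like the ones reported above (50%, 42%, 60%): not ready.
print(ready_for_exam([50, 42, 60]))

# Six practice exams, all at or above 80%: ready to schedule.
print(ready_for_exam([82, 85, 91, 88, 80, 94]))
```

The check uses `all` rather than an average on purpose: one strong score should not hide a practice exam that exposed a weak area.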

I’m upset that even though I passed the exam, I’m still lacking some practical skills, so I’m going to go through Adrian’s Developer exam, but without cramming this time. If you actually learn the materials and practice them, they are definitely useful in the real world. I hope this helps you pass the exam and actually learn the material.

P.S. I vehemently disagree with Adrian on one thing in his course: doggogram.io is definitely better than catagram.io, although his cats are pretty cool.

Testimonial: I passed the SAA-C02 exam!

I sat the exam at a PearsonVUE test centre and scored 816.

The exam had lots of questions around S3, RDS and storage. To be honest it was a bit of a blur but they are the ones I remember.

I was a bit worried before sitting the exam as I only hit 76% in the official AWS practice exam the night before, but it turned out alright in the end!

I have around 8 years of experience in IT but AWS was relatively new to me around 5 weeks ago.

Training Material Used

Firstly I ran through the u/stephanemaarek course which I found to pretty much cover all that was required!

I then used the u/Tutorials_Dojo practice exams. I took one before starting Stephane’s course to see where I was at with no training. I got 46% but I suppose a few of them were lucky guesses!

I then finished the course, took another test and hit around 65%. TD was great as they gave explanations for the answers. I then used this to go back to the course and go over my weak areas again.

I then couldn’t seem to get higher than the low 70s on the exams, so I went through u/neal-davis’s course. This was also great as it had an “Exam Cram” video at the end of each topic.

I also set up flashcards on BrainScape which helped me remember AWS services and what their function is.

All in all it was a great learning experience and I look forward to putting my skills into action!

Testimonial: I passed SAA (799) with about an hour left on the clock.

Many FSx / EFS / Lustre questions

S3 use cases, storage tiers and CloudFront were pretty prominent too

Only got one “figure out what’s wrong with this IAM policy” question

A handful of DynamoDB questions and a handful on picking use cases between different database types or caching layers.

Other typical tips: When you’re unclear on which answer you should pick, or if they seem very similar, work on eliminating answers first. “It can’t be X because of Y” can help a lot.
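For anyone wondering what a “figure out what’s wrong with this IAM policy” question can look like, here is a purely illustrative sketch. The policy, the bucket name `example-bucket` and the helper `find_object_action_mismatches` are all hypothetical and not taken from the exam. It shows one classic mistake: granting an object-level S3 action on the bucket ARN instead of on the objects inside it.

```python
# Illustrative only: this policy and checker are hypothetical examples,
# not exam content. "example-bucket" is a made-up bucket name.
S3_ARN_PREFIX = "arn:aws:s3:::"
OBJECT_ACTIONS = {"s3:GetObject", "s3:PutObject", "s3:DeleteObject"}

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "s3:GetObject",
            # Bug: object-level actions need an object ARN such as
            # "arn:aws:s3:::example-bucket/*", not the bucket ARN itself.
            "Resource": "arn:aws:s3:::example-bucket",
        }
    ],
}


def as_list(value):
    """IAM allows a single string or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def find_object_action_mismatches(policy):
    """Return (action, resource) pairs where an object-level S3 action
    targets a bucket ARN (nothing after the bucket name)."""
    problems = []
    for statement in as_list(policy["Statement"]):
        for action in as_list(statement.get("Action", [])):
            if action not in OBJECT_ACTIONS:
                continue
            for resource in as_list(statement.get("Resource", [])):
                is_s3 = resource.startswith(S3_ARN_PREFIX)
                if is_s3 and "/" not in resource[len(S3_ARN_PREFIX):]:
                    problems.append((action, resource))
    return problems


print(find_object_action_mismatches(policy))
```

The checker only covers this one mistake and only exact action names (no wildcards), so treat it as a study aid rather than a policy validator.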

Testimonial: Passed the AWS Solutions Architect Associate exam!

I prepared mostly from freely available resources as my basics were strong. I bought Jon Bonso’s tests on Udemy and they turned out to be super important for preparing for that particular type of question (i.e. the questions which feel subjective, but aren’t), and for understanding the line of questioning and the most suitable answers for some common scenarios.

I created a Notion notebook to note down those common scenarios, exceptions, what supports what, integrations etc. I used that notebook and the cheat sheets on the Tutorials Dojo website for revision on the final day.

I found the exam a little tougher than Jon Bonso’s tests, but his practice tests on Udemy were crucial. I wouldn’t have passed without them.

Piece of advice for upcoming test aspirants: Get your basics right, especially networking. Understand properly how different services interact in a VPC. Focus more on the last line of the question: it usually hints at what exactly is needed, whether that is cost optimization, performance efficiency or high availability. Little to no operational effort means serverless. Understand all serverless services thoroughly.

Testimonial: Passed Solutions Architect Associate (SAA-C02) Today!

I have almost no experience with AWS, except for completing the Certified Cloud Practitioner earlier this year. My work is pushing all IT employees to complete some cloud training and certifications, which is why I chose to do this.

How I Studied:

My company pays for A Cloud Guru (ACG) subscriptions for its employees, so I used that for the bulk of my learning. I took notes on 3×5 notecards on the key terms and concepts for review.

Once I scored passing grades on the ACG practice tests, I took the Jon Bonso tests on Udemy, which are much more difficult and fairly close to the difficulty of the actual exam. I scored 45%-74% on every Bonso practice test, and spent 1-2 hours after each test reviewing what I missed, supplementing my note cards, and taking time to understand my weak spots. I only took these tests once each, but in between each practice test, I would review all my note cards until I had the content largely memorized.

The Test:

This was one of the most difficult certification tests I’ve ever done. The exam was remote proctored with PearsonVUE (I used PSI for the CCP and didn’t like it as much). I felt like I was failing half the time. I marked about 25% of the questions for review, and I used up the entire allotted time. The questions are mostly about understanding which services interact with which other services, or which services are incompatible with the scenario. It was important for me to read through each response and eliminate the ones that don’t make sense. A lot of the responses mentioned AWS services that sound good but don’t actually work together (e.g. if it doesn’t make sense to have service X querying database Y, that probably isn’t the right answer). I can’t point to one domain that really needs to be studied more than any other. You need to know all of the content for the exam.

Final Thoughts:

The ACG practice tests are not a good metric for success on the actual SAA exam, and I would not have passed without Bonso’s tests showing me my weak spots. PearsonVUE is better than PSI. Make sure to study everything thoroughly and review excessively. You don’t necessarily need 5 different study sources and years of experience to be able to pass (although both of those definitely help). Good luck to anyone who took the time to read!

Testimonial: Passed AWS CSAA today!

AWS Certified Solutions Architect Associate

So glad to pass my first AWS certification after 6 weeks of preparation.

My Preparation:

After a series of trial and error in picking the appropriate learning content, I eventually went with the community’s advice and took the course presented by the amazing u/stephanemaarek, in addition to the practice exams by Jon Bonso.

At this point, I can’t say anything that hasn’t been said already about how helpful they are. It’s a great combination of learning material, I appreciate the instructor’s work, and the community’s help in this sub.

Review:

Throughout the course I noted down the important points, and used the course slides as a reference in the first review iteration.

Before resorting to Udemy’s practice exams, I purchased a practice exam from another website, that I regret (not to defame the other vendor, I would simply recommend Udemy).

Udemy’s practice exams were incredible, in that they made me aware of the points I hadn’t understood clearly. After each exam, I would go through both the incorrect answers and the questions I had marked for review, write down the topic for review, and read the explanation thoroughly. The explanations point to the respective documentation in AWS, which is a recommended read, especially if you don’t feel confident with the service.

What I want to note is that I didn’t get satisfying marks on my first go at the practice exams (I got an average of ~70%).

Throughout the 6 practice exams, I aggregated a long list of topics to review, went back to the course slides and practice-exams explanations, in addition to the AWS documentation for the respective service.

On the second go I averaged 85%. The second attempt at the exams was important as a confidence boost, as I made sure I understood the services more clearly.

The take away:

Don’t feel disappointed if you get bad results at your practice-exams. Make sure to review the topics and give it another shot.

The AWS documentation is your friend! It is very clear and concise. My only regret is not having referenced the documentation enough after learning new services.

The exam:

I scheduled the exam using PSI.

I was very confident going into the exam. But going through such an exam environment for the first time made me feel under pressure. Partly this was because I didn’t feel comfortable being monitored (I was afraid of getting eliminated if I moved or covered my mouth), but mostly it was because there was a lot at stake for me, and I had to pass on the first go.

The questions were harder than expected, but I tried to analyze the questions more and eliminate the invalid answers.

I was very nervous and kept reviewing flagged questions up to the last minute. Luckily, I pulled through.

The take away:

The proctors are friendly. Just make sure you feel comfortable in the exam place, and use the practice exams to prepare for the actual exam’s environment. That includes sitting with a straight posture and not talking, whispering, or looking away.

Make sure to organize the time dedicated to each question well, and don’t let yourself get distracted by being monitored like I did.

Don’t skip questions that you are not sure of. Try to select the most probable answer, then flag the question. This will make the very stressful last-minute review easier.